Today I would like us to talk about the special case of the deadlock called key lookup deadlock. This deadlock can happen when multiple sessions are reading and updating the same rows simultaneously. Let us look at the example.

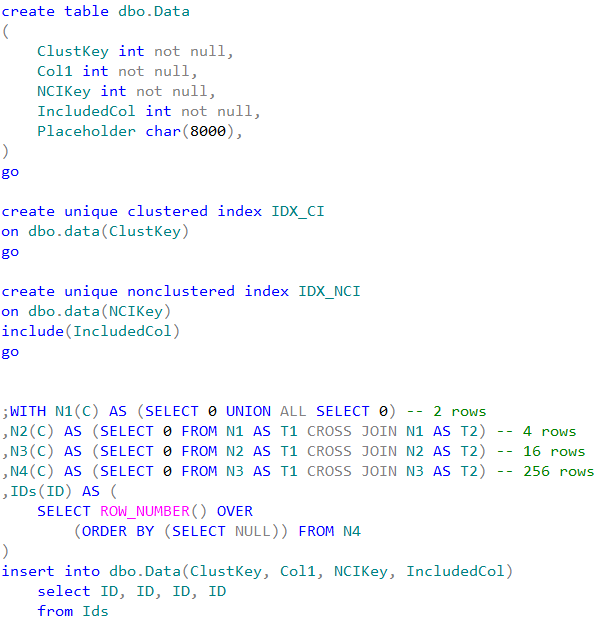

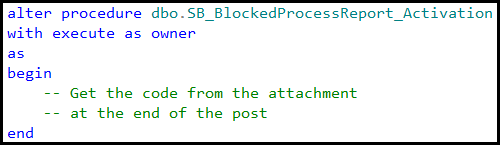

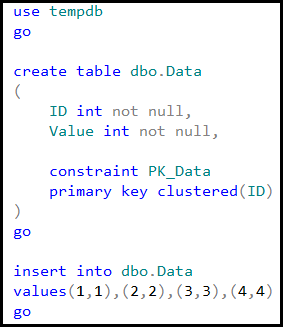

As the first step, let us create the table with the clustered and nonclustered indexes. Nonclustered index has one included column. We are inserting 256 rows there keeping clustered and nonclustered key values the same – from 1 to 256.

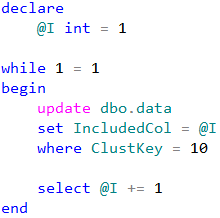

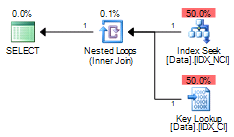

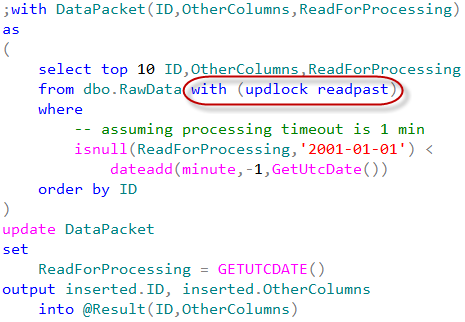

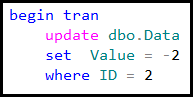

Now let us run two sessions in parallel. In the first session, we are updating the column that included to the nonclustered index using clustered key value in where clause.

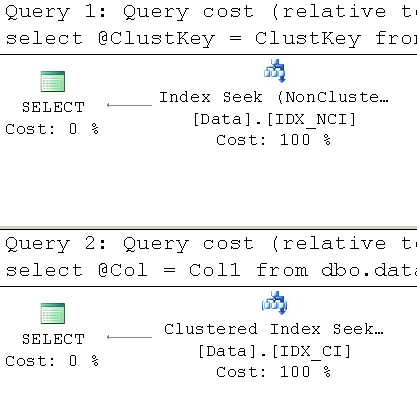

As we can guess, this session will use clustered index seek operation in the execution plan.

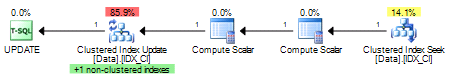

The second session will read the same row using nonclustered key value

Because Col1 is not part of the nonclustered index, we would have nonclustered index seek and key lookup operations in the execution plan:

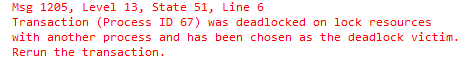

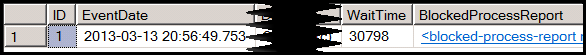

Both statements are running in the loop just to emulate concurrent access to the data. In just a few seconds, we will have the deadlock and session with select would be chosen as the deadlock victim.

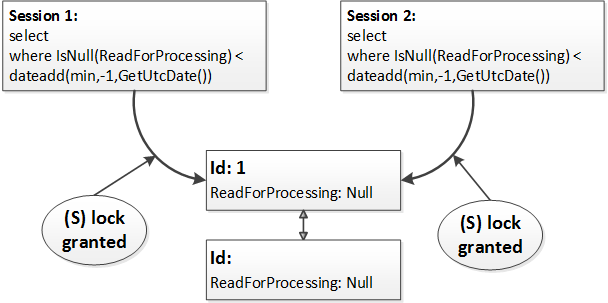

At the first glance, this looks confusing. Both sessions are dealing with the same row. We would expect to have the blocking cases due to exclusive (X) and shared (S) lock incompatibility for the duration of the transaction although we do not expect the deadlock. However, even if we are dealing with the single row, there are two indexes involved.

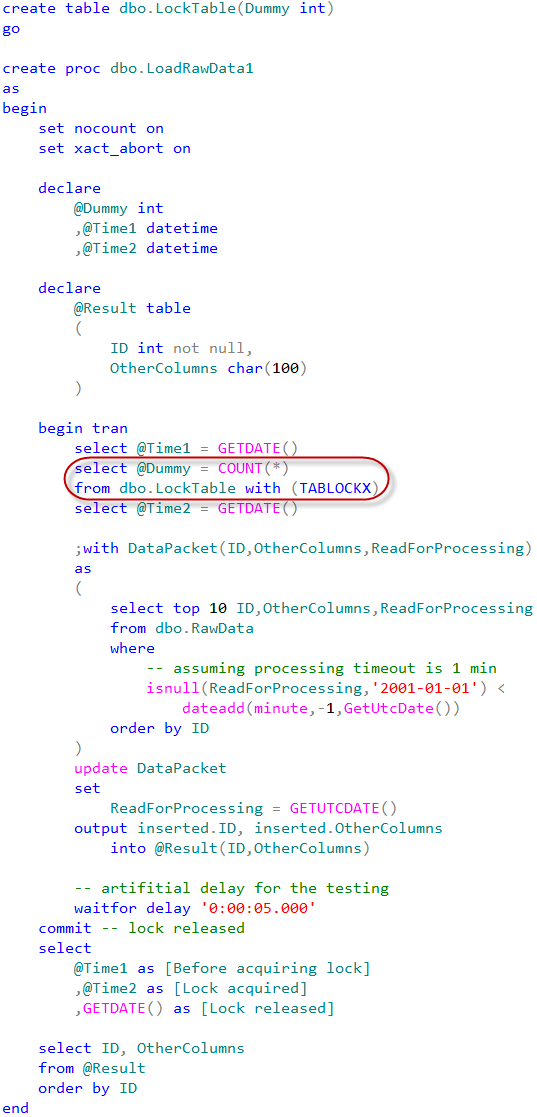

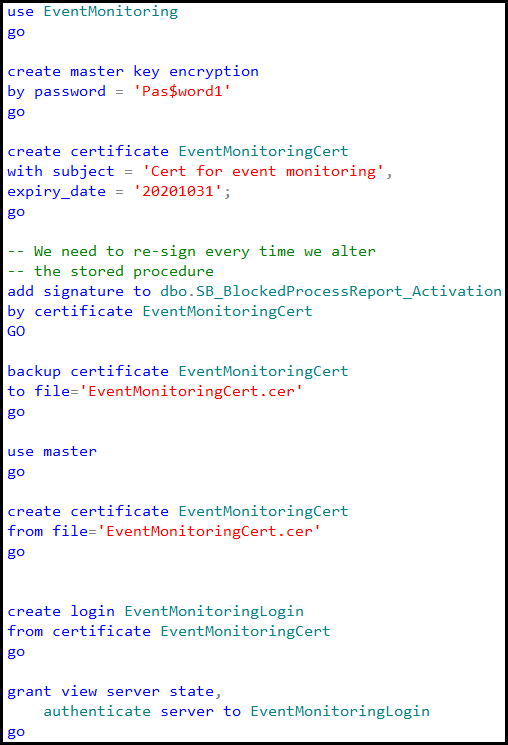

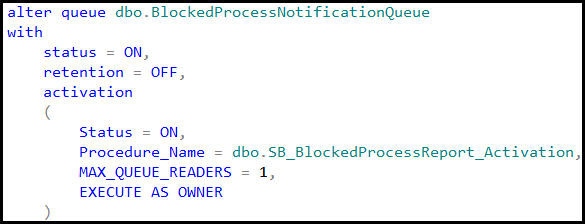

Let us take a look what locks SQL Server acquires when the table has the multiple indexes. First, let us update the column, which does not belong to nonclustered index, and see what row-level locks will be held.

As we see, there is only one exclusive (X) lock on the clustered index. Col1 is not part of nonclustered index and as result, SQL Server does not need to update it and acquire the lock there.

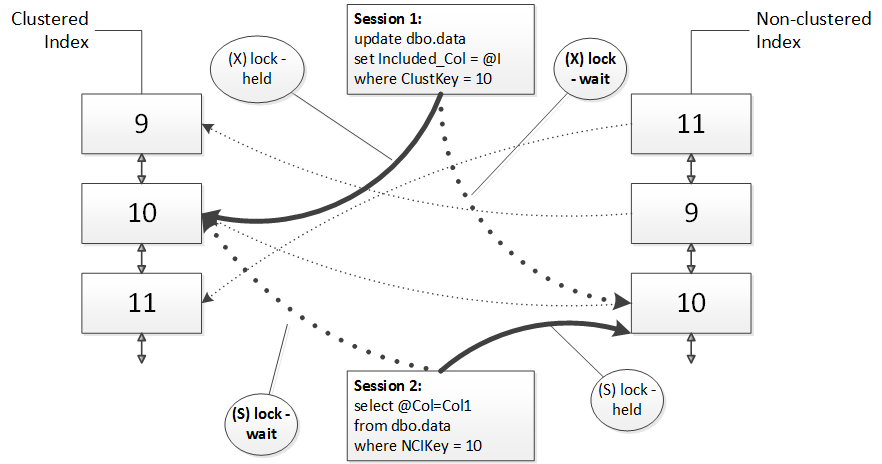

Let us see what happen, if we update the column, which is included to the nonclustered index.

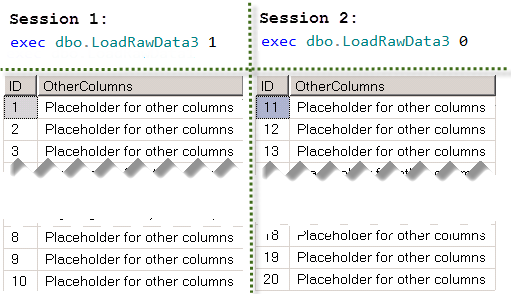

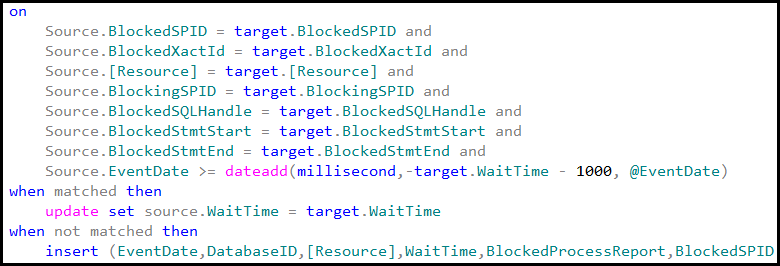

As we see, now we have two locks in place – one on each index key. And the point here is that we run such update, SQL Server would lock the row in one index first and another index after that. The sequence depends on the execution plan and in our case it would acquire exclusive (X) lock on the clustered index first.

Similarly, our select statement also acquires two shared (S) locks. First, it would lock the row in non-clustered index and then acquire the lock on the clustered index during key lookup operation.

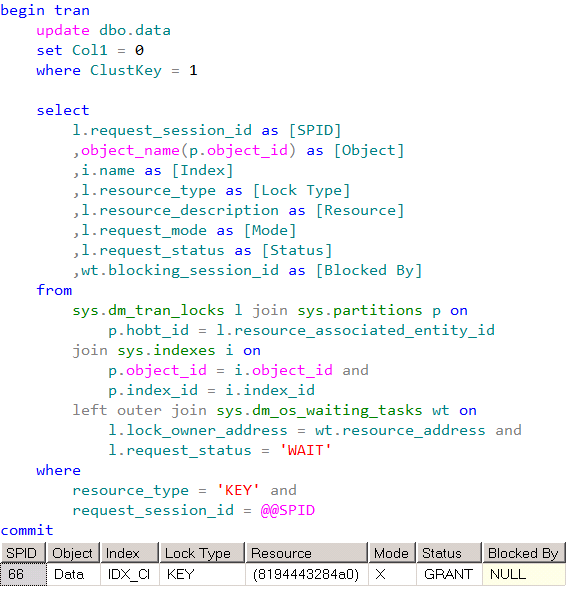

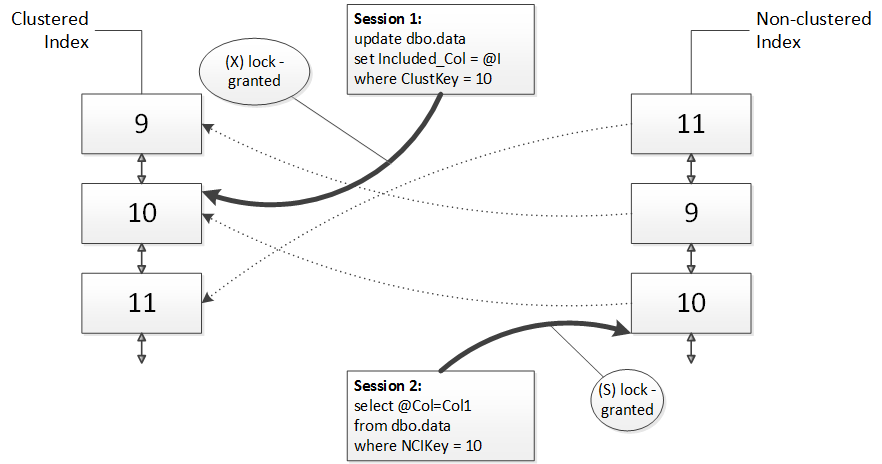

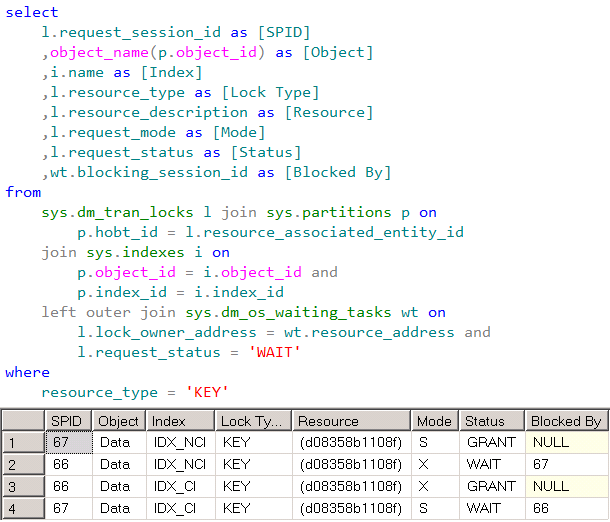

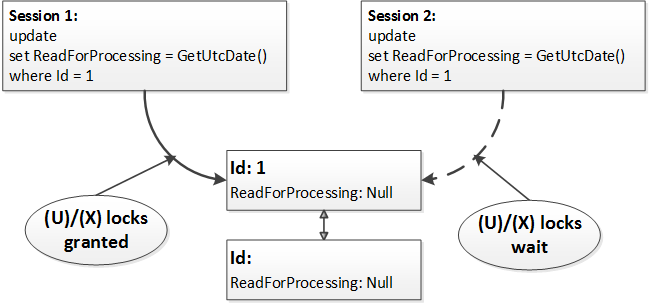

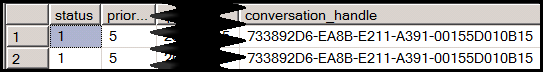

That should give us the idea why we have the deadlock. Both statements are running simultaneously. In the first step, update statement acquires exclusive (X) lock on the row in the clustered index and select statement acquires shared (S) lock on the row in the nonclustered index

After that, update statement is trying to acquire the exclusive (X) lock on the nonclustered index row and being blocked because there is the shared (S) lock held. Same thing happens with select statement, which is trying to acquire shared (S) lock on the clustered index row and being blocked because of the exclusive (X) lock held. Classic deadlock.

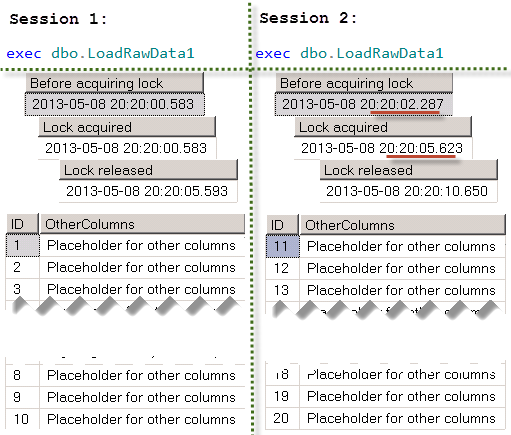

To prove that, we can run the statement that shows the current row-level locks immediately after we run our original two sessions. If we are lucky, we can catch the state when both sessions are blocked before deadlock monitor task wakes up and terminate one of the sessions.

There are a few ways that can help to eliminate the deadlock. First option would be eliminating key lookup operation by adding Col1 as included column to the index. In such case, select statement does not need to access the data from the clustered index, which will solve the problem. Unfortunately, that solution increases the size of the nonclustered index key row and introduce additional overhead during data modifications and index maintenance.

Another approach would be switching to optimistic isolation levels where writers do not block readers. While it can help to solve blocking and deadlocking issues, it would also introduce additional tempdb overhead and increases the fragmentation.

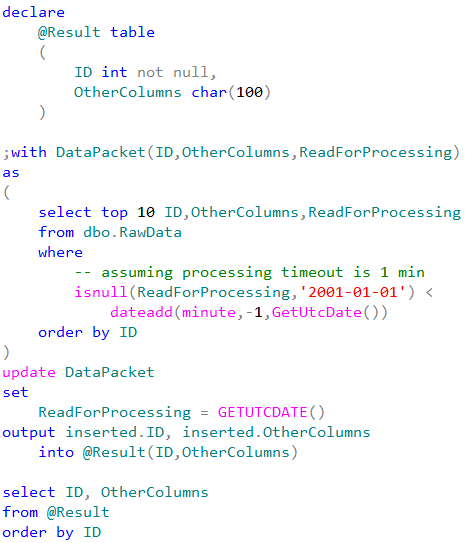

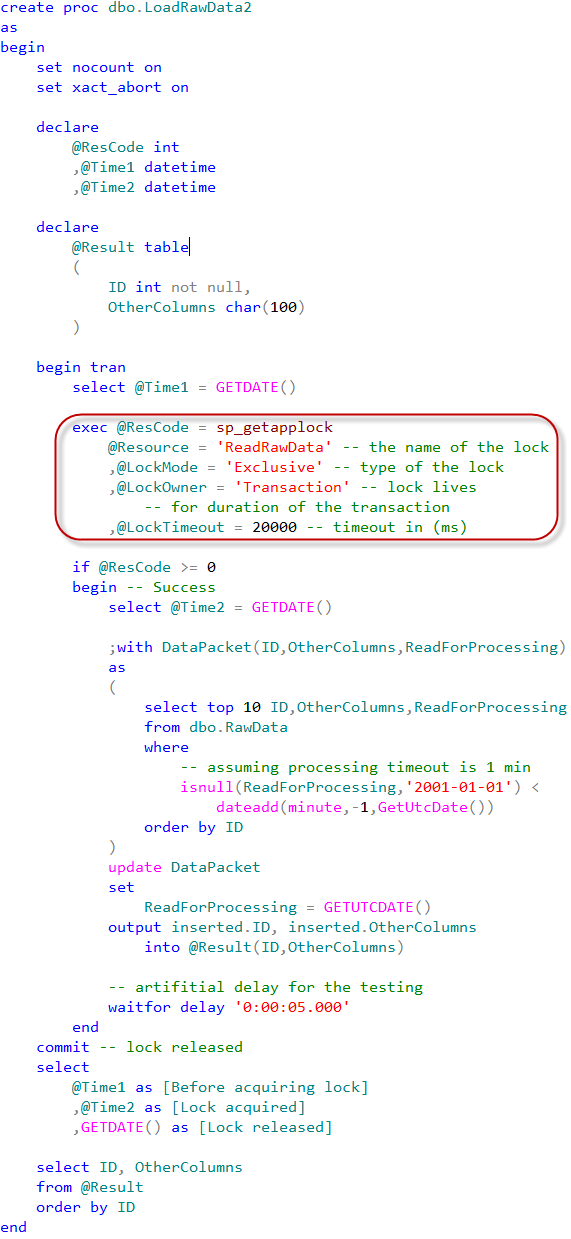

Finally, we can refactor the code and separate nonclustered index seek and key lookup operations to two separate selects

Both select statements are working on the single index scope and as result would not hold shared (S) locks on the both indexes simultaneously when we are using read committed transaction isolation level. Although, this solution would not work in repeatable read and serializable isolation levels where shared (S) locks held until the end of the transaction.

Source code is available for download