One of the most challenging issues for developers who don’t live in the RDBMS world is making a system work seamlessly in a multi-user environment. Code that works perfectly in development and QA starts to fall apart when dozens of users access the system. There are timeouts, deadlocks and other issues that the developer cannot even reproduce in house. It does not really matter that SQL Server uses row-level locking or that the transaction isolation level is set to read uncommitted: locking, blocking and deadlocking still occur.

Today I’m going to start a series of posts about locking in Microsoft SQL Server. I’ll try to explain why blocking and deadlocks occur in the system, how you can troubleshoot related problems and what you should do to minimize them. We will cover the different transaction isolation levels and see how and why they affect the behavior of the system. We will also talk about quite a few other things.

Update (2018-01-23): Consider reading Part 21: Intro into Transaction Management and Error Handling first.

So let’s start with the lock types. What is a lock? In short, it is an in-memory structure (64 bytes on a 32-bit OS or 128 bytes on a 64-bit OS). The structure has an owner, a type and a resource hash that links it to the resource it protects (row, page, table, file, database, etc.). Obviously it’s more complicated and has quite a few other attributes, but for our practical purposes that level of detail is enough.
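To make that description a bit more concrete, here is a minimal sketch in Python. It is a toy model, not SQL Server’s real structure: the `Lock` class, the `resource_hash` helper, the session id 51 and the row descriptor are all names and values I made up for illustration. The only numbers taken from the text are the 64 and 128 byte sizes, which the sketch uses to estimate how much memory a given number of locks would take.

```python
from dataclasses import dataclass

# Per-lock sizes quoted in the text: 64 bytes on 32-bit, 128 bytes on 64-bit.
LOCK_BYTES = {32: 64, 64: 128}


@dataclass(frozen=True)
class Lock:
    owner: int          # hypothetical: id of the session that holds the lock
    mode: str           # lock type, e.g. "S", "U", "X" or "IX"
    resource_hash: int  # links the lock to the resource it protects


def resource_hash(resource: tuple) -> int:
    """Illustrative stand-in for hashing a resource descriptor."""
    return hash(resource)


def lock_memory_bytes(lock_count: int, os_bits: int = 64) -> int:
    """Rough memory estimate: number of locks times the per-lock size."""
    return lock_count * LOCK_BYTES[os_bits]


# A made-up row descriptor and an exclusive lock held on it by session 51.
row = ("row", "MyTable", 42)
lock = Lock(owner=51, mode="X", resource_hash=resource_hash(row))

# One million locks on a 64-bit OS.
print(lock.mode, lock_memory_bytes(1_000_000))
```

With those sizes, one million locks on a 64-bit system come to 128,000,000 bytes, which shows why the number of locks held at the same time matters.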

SQL Server has more than 20 different lock types, but for now let’s focus on the most important ones.

- Shared locks (S). Those locks are acquired by readers during read operations such as SELECT. I’d like to mention that this happens in most cases but not all the time. There are some cases when readers don’t acquire (S) locks. We will talk about that later.

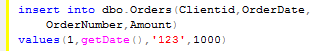

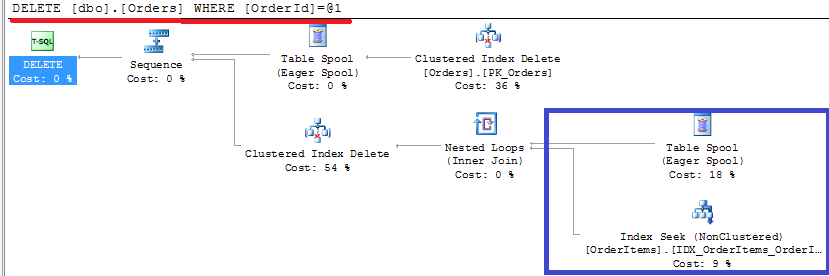

- Exclusive locks (X). Those locks are acquired by writers during data modification operations such as INSERT, UPDATE or DELETE. They prevent one object from being modified by different sessions at the same time. Those locks are always acquired and held until the end of the transaction.

- Update locks (U). Those locks are a mix between shared and exclusive locks. SQL Server uses them with data modification statements while searching for the rows that need to be modified. For example, if you issue a statement like “update MyTable set Column1 = 0 where Column1 is null”, SQL Server acquires an update lock on every row it processes while searching for rows where Column1 is null. When an eligible row is found, SQL Server converts the (U) lock to (X).

- Intent locks (IS, IX, IU, etc.). Those locks indicate locks on child objects. For example, if a row has an (X) lock, it would introduce (IX) locks at the page, table and database levels. The main purpose of those locks is optimization. Think about a situation when you need exclusive access to the database (i.e. an (X) lock at the database level). If SQL Server did not have intent locks, it would have to scan all rows in all objects to see if any low-level locks had been acquired.
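The intent lock idea can be sketched with a toy model in Python. This is only an illustration of the optimization and not how SQL Server implements it: the resource names, the `PARENT` map and both functions are hypothetical, and the hierarchy simply follows the row, page, table, database example above.

```python
# Hypothetical resource hierarchy: every resource points to its parent.
PARENT = {
    "row:1": "page:10",
    "row:2": "page:10",
    "page:10": "table:MyTable",
    "table:MyTable": "database:MyDb",
    "database:MyDb": None,
}

# Intent mode placed on the ancestors for a given low-level lock mode.
INTENT_MODE = {"S": "IS", "X": "IX"}


def locks_for(resource, mode):
    """Return the intent locks on every ancestor, top-down, then the lock itself."""
    ancestors = []
    parent = PARENT[resource]
    while parent is not None:
        ancestors.append((parent, INTENT_MODE[mode]))
        parent = PARENT[parent]
    ancestors.reverse()  # database first, then table, then page
    return ancestors + [(resource, mode)]


def can_lock_database_exclusively(held_locks, database):
    """Inspect database-level entries only; rows and pages are never scanned."""
    return not any(resource == database for resource, _ in held_locks)


held = locks_for("row:1", "X")
for resource, mode in held:
    print(resource, mode)
print(can_lock_database_exclusively(held, "database:MyDb"))
```

The point of the sketch is the second function: because the row-level (X) lock left an (IX) entry at the database level, a request for exclusive access to the database can be answered by looking at that one level.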

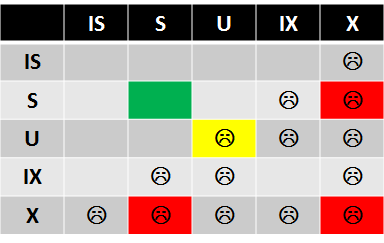

Obviously the biggest question is lock compatibility. If you open the MSDN site, you’ll see a nice and “easy to understand” matrix with more than 400 cells. But for our practical purposes, let’s focus on a smaller version that covers only the (S), (U) and (X) locks.

So what we need to remember are basically 3 things:

- (S) locks are compatible with (S) and (U) locks.

- (X) locks are incompatible with all other lock types, including other (X) locks.

- (U) locks are compatible with (S) locks but incompatible with other (U) locks.
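Those three rules are small enough to write down as code. Here is a minimal sketch in Python that covers only the (S), (U) and (X) modes. The `is_compatible` and `can_grant` helpers are my own names for illustration and have nothing to do with SQL Server internals.

```python
MODES = ("S", "U", "X")

# (held, requested) pairs that can coexist on the same resource.
# Every pair not listed here is incompatible.
COMPATIBLE = {
    ("S", "S"),
    ("S", "U"),
    ("U", "S"),
}


def is_compatible(held, requested):
    """True if a lock in the requested mode can coexist with a held lock."""
    return (held, requested) in COMPATIBLE


def can_grant(requested, held_modes):
    """A request is granted only if it is compatible with every held lock."""
    return all(is_compatible(held, requested) for held in held_modes)


# Print the 3x3 matrix: one row per held mode, one column per requested mode.
for held in MODES:
    print(held, [is_compatible(held, requested) for requested in MODES])
```

For example, `can_grant("U", ["S", "S"])` is true because a (U) lock can coexist with any number of readers, while `can_grant("U", ["S", "U"])` is false because a second (U) lock on the same resource has to wait.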

Simple enough. Next time we will look at transaction isolation levels and see how they affect locking behavior.