Before we start talking about data partitioning within the same database, I’d like to discuss a couple of methods of partitioning by scaling out our data and servers. First of all, let’s think about the situation when we have all our data in the same database/table.

This is a fairly common multi-tier architecture nowadays. Obviously, we can also have client software (especially legacy software) that connects to the database directly.

As we already discussed, almost every system stores two different kinds of data: operational and historical. So what if we do something like this:

In this schema, we keep all operational data and catalog entities in the main database and move historical data to separate SQL Server(s). We define linked servers, which allow us to join historical data with catalog entities and, in some cases, union the data from different servers. Data placement is transparent to the clients, which access the data through an application server, a must-have in such an architecture. The application server knows how the data is stored, queries the data from multiple databases, merges the streams and returns the final dataset to the clients. Technically speaking, we can even gain performance by querying the data in parallel: each database can be queried from a separate thread.
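To make the "query in parallel and merge the streams" idea a bit more concrete, here is a minimal sketch of what the application server could do. It is an illustration only: the server names, the row layout and the `fetch_orders` helper are hypothetical stand-ins, and a real implementation would open a connection to each SQL Server instead of reading from an in-memory dictionary.

```python
from concurrent.futures import ThreadPoolExecutor

def query_all(sources, run_query):
    """Run run_query against every source on its own thread and merge the rows."""
    with ThreadPoolExecutor(max_workers=max(1, len(sources))) as pool:
        # pool.map returns results in the same order as `sources`
        per_source = list(pool.map(run_query, sources))
    merged = []
    for rows in per_source:
        merged.extend(rows)
    return merged

# Hypothetical data standing in for the main and historical servers.
# Each row is (order_id, order_date, amount).
FAKE_SERVERS = {
    "main": [(3, "2024-05-01", 120.0)],
    "history_a": [(2, "2023-07-14", 80.0)],
    "history_b": [(1, "2022-02-03", 45.5)],
}

def fetch_orders(server_name):
    # Assumption: in a real system this would connect to `server_name`
    # and run the same SELECT statement against it.
    return FAKE_SERVERS[server_name]

orders = query_all(["main", "history_a", "history_b"], fetch_orders)
```

The clients only ever see `orders`; which server each row came from stays hidden behind the application server.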

Legacy software that queries the database directly is usually OK too, as long as it does not need access to historical data. Even if historical data is needed, this can sometimes be worked around by creating distributed partitioned views.

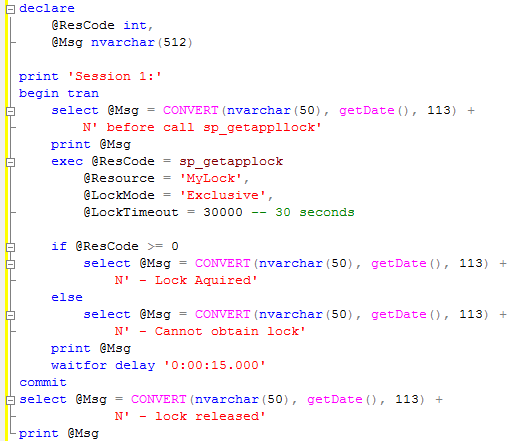

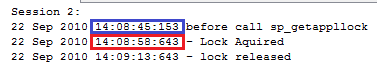

The biggest drawback of this design is the additional complexity and overhead of developing the code that combines data from multiple sources, that is, application server code and, sometimes, database code. Everything depends on what we need to do with the data. Some cases are very simple (think about code like this running in the single database/table solution):

With multiple servers, we need to open multiple database connections and run those statements against each server:

And finally, we merge the data for the client. We can even cache the Customers list somewhere on the application server and avoid cross-server joins. Simple enough. But what if we need something like this: find the most expensive orders, page the results and return the second page to the client? (Small disclaimer: this is not the best way of doing paging. Check this post for more information.)

As you can imagine, there is no easy way to implement it. We can either write a query that collects the data from multiple databases via linked servers and then sorts and pages it, or do it on the application server side, which can lead to complex code that does not perform well enough. It’s also worth mentioning that linked servers can introduce their own set of issues, from both performance and development standpoints.
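To show why the application-server variant gets complicated, here is a rough sketch of one way to page across servers. It assumes each server can return its own top N orders sorted by amount; the `fetch_top` helper, the server names and the `(order_id, amount)` row layout are hypothetical. Note that to build page P we have to request P × page size rows from every server, so deeper pages become progressively more expensive.

```python
import heapq
from itertools import islice

def page_across_servers(sources, fetch_top, page, page_size):
    """Return page `page` (1-based) of orders, most expensive first."""
    needed = page * page_size
    # Every server must return its own top `needed` rows, sorted by amount
    # descending, because any of them could hold all rows of the page.
    streams = [fetch_top(source, needed) for source in sources]
    # heapq.merge lazily merges already-sorted inputs into one sorted stream.
    merged = heapq.merge(*streams, key=lambda row: row[1], reverse=True)
    start = (page - 1) * page_size
    return list(islice(merged, start, start + page_size))

# Hypothetical data standing in for two servers; rows are (order_id, amount).
FAKE_SERVERS = {
    "main": [(1, 500.0), (2, 120.0), (3, 90.0)],
    "history": [(4, 700.0), (5, 300.0), (6, 50.0)],
}

def fetch_top(server_name, n):
    # Assumption: a real version would run a TOP (n) ... ORDER BY query
    # against `server_name` instead of sorting an in-memory list.
    rows = sorted(FAKE_SERVERS[server_name], key=lambda row: row[1], reverse=True)
    return rows[:n]

second_page = page_across_servers(["main", "history"], fetch_top, page=2, page_size=2)
```

With the sample data above, the second page contains the orders for 300.0 and 120.0, which come from two different servers.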

The biggest problem I see with this design is that requirements are rarely static; things tend to change. I have this architecture implemented in one of my systems, and while things were relatively simple at the beginning, newer requirements led to huge development overhead. But everything, of course, is system-specific and falls into the “It depends” category. This design has its own purpose and use cases, and we will talk about them later.