Heap tables are tables without a clustered index. The data in heap tables is unsorted. SQL Server does not guarantee nor maintain any sorting order of the data in the heap tables.

When we insert data into heap tables, SQL Server tries to fill pages as much as possible, although it does not analyze the actual free space available on a page. It uses the Page Free Space (PFS) allocation map instead. SQL Server errs on the side of caution, and it uses the low value from the PFS free space percentage tier during the estimation.

For example, if a data page stores 4,100 bytes of data, and, as result, has 3,960 bytes of free space available, PFS would indicate that the page is 51-80 percent full. SQL Server would not put a new row to the page if its size exceeds 20 percent (8,060 bytes * 0.2 = 1,612 bytes) of the page size.

Let’s look at the example and create the table with the code shown below.

create table dbo.Heap(Val varchar(8000) not null);

;with CTE(ID,Val) as

(

select 1, replicate('0',4089)

union all

select ID + 1, Val from CTE where ID < 20

)

insert into dbo.Heap(Val)

select Val from CTE;

select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent

from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.Heap'),0,null,'DETAILED');

At this point, the table stores 20 rows of 4,100 bytes each. SQL Server allocates 20 data pages—one page per row—with 3,960 bytes available. PFS would indicate that pages are 51-80 percent full.

As the next step, let’s inserts the small 111-byte row, which is about 1.4 percent of the page size. As a result, SQL Server knows that the row would fit into one of the existing pages (they all have at least 20 percent of free space available), and a new page should not be allocated.

insert into dbo.Heap(Val) values(replicate('1',100));

select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent

from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.Heap'),0,null,'DETAILED');

Lastly, the third insert statement needs 2,011 bytes for the row, which is about 25 percent of the page size. SQL Server does not know if any of the existing pages have enough free space to accommodate the row and, as a result, allocates the new page. You can see that SQL Server does not access existing pages by checking the actual free space and uses PFS data for the estimation.

insert into dbo.Heap(Val) values(replicate('2',2000));

select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent

from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.Heap'),0,null,'DETAILED');

That behavior leads to the situation where SQL Server unnecessarily allocates new data pages, leaving large amount of free space unused. It is not always a problem when the size of rows vary—in those cases, SQL Server eventually fills empty spaces with the smaller rows. However, especially in cases when all rows are relatively large, you can end up with large amounts of wasted space.

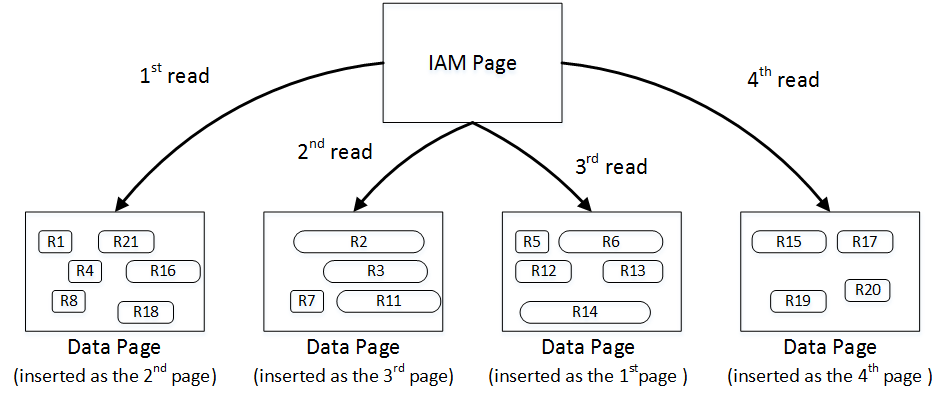

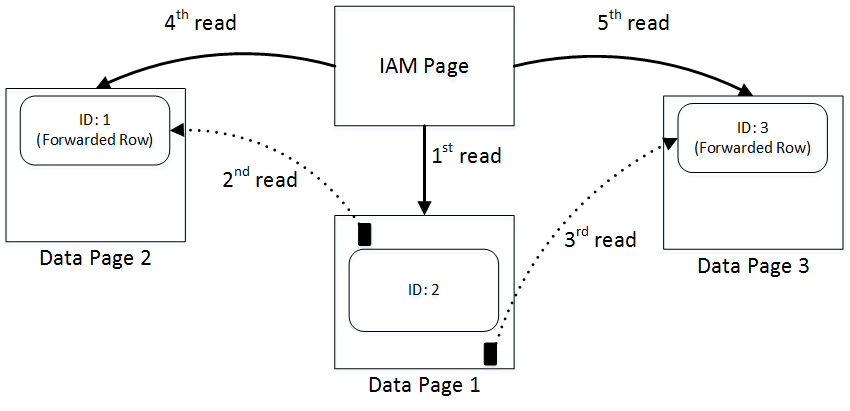

When selecting data from the heap table, SQL Server uses as Index Allocation Map (IAM) to find the pages and extents that need to be scanned. It analyzes what extents belong to the table and processes them based on their allocation order rather than on the order in which the data was inserted. You can see it in figure below.

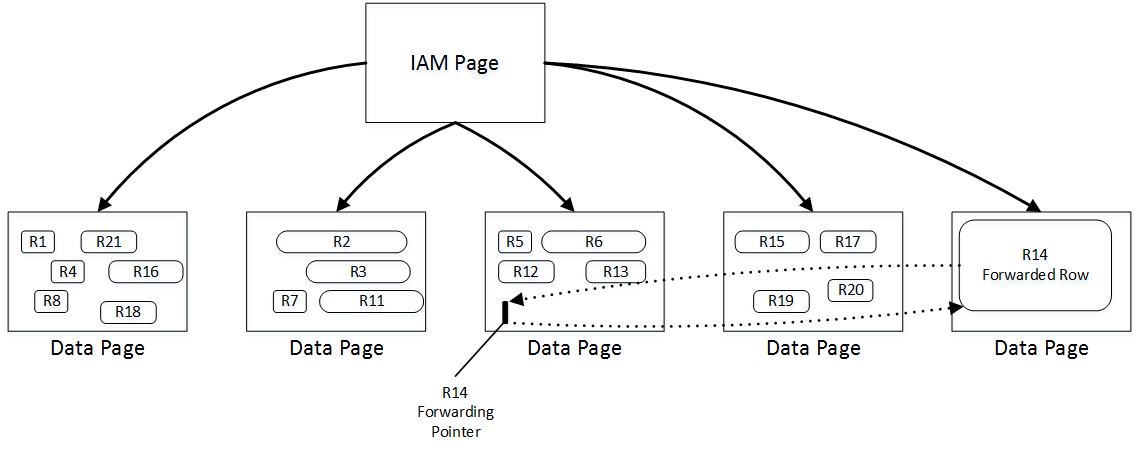

When you update the row in the heap table, SQL Server tries to accommodate it on the same page. If there is no free space available, SQL Server moves the new version of the row to another page and replaces the old row with a special 16-byte row called a forwarding pointer. The new version of the row is called forwarded row.

There are two main reasons why forwarding pointers are used. First, they prevent updates of nonclustered index keys, which referencing the row.

In addition, forwarding pointers helps minimize the number of duplicated reads – the situation when a single row is read multiple times during the table scan. As the example, let’s look at figure above and assume that SQL Server scans the pages in left-to-right order. Next, let’s assume that the row in page 3 was modified after the page was read at the time when SQL Server reads page 4. The new version of the row would be moved to page 5, which has yet to be processed. Without forwarding pointers, SQL Server would not know that the old version of the row had already been read, and it would read it again during the page 5 scan. With forwarding pointers, SQL Server would ignore the forwarded rows.

Forwarding pointers help minimize duplicated reads at cost of additional read operations. SQL Server follows the forwarding pointers and reads the new versions of the rows at the time it encounters them. That behavior can introduce an excessive number of I/O operations.

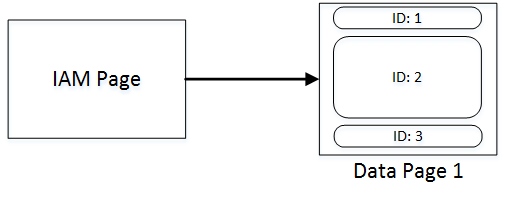

Let’s create the table and insert three rows there.

create table dbo.ForwardingPointers

(

ID int not null,

Val varchar(8000) null

);

insert into dbo.ForwardingPointers(ID,Val)

values

(1,null),

(2,replicate('2',7800)),

(3,null);

select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent, forwarded_record_count

from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.ForwardingPointers'),0,null,'DETAILED');

set statistics io on

select count(*) as [RowCnt] from dbo.ForwardingPointers

set statistics io off

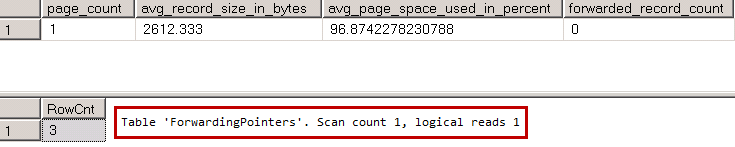

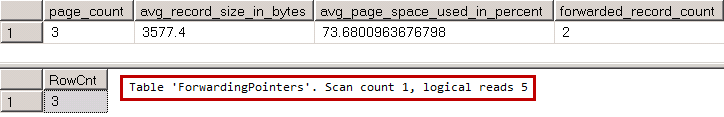

You can see results below

06. Forwarding Pointers: I/O without forwarding pointers

All three rows fit into the single page, and SQL Server needs to read just that page when it scans the table.

Let’s update two of the table rows and increase their size. The new version of the rows would not fit into the page anymore, which introduces the allocation of the two new pages and two forwarding pointers.

update dbo.ForwardingPointers set Val = replicate('1',5000) where ID = 1;

update dbo.ForwardingPointers set Val = replicate('3',5000) where ID = 3;

select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent, forwarded_record_count

from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.ForwardingPointers'),0,null,'DETAILED');

set statistics io on

select count(*) as [RowCnt] from dbo.ForwardingPointers

set statistics io off

When SQL Server reads the forwarding pointer rows from page 1, it follows them and reads pages 2 and 3 immediately thereafter. After that, SQL Server reads those pages one more time during the regular IAM scan process. As a result, we have five read operations, even though our table has just three data pages.

It does not look as bad in case of the small table. Let’s look at the same issue in case, when table has more rows. Let’s insert 65,536 rows to our table.

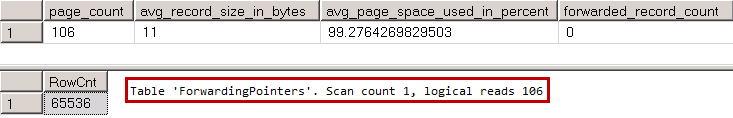

truncate table dbo.ForwardingPointers go ;with N1(C) as (select 0 UNION ALL select 0) -- 2 rows ,N2(C) as (select 0 from N1 as T1 cross join N1 as T2) -- 4 rows ,N3(C) as (select 0 from N2 as T1 cross join N2 as T2) -- 16 rows ,N4(C) as (select 0 from N3 as T1 cross join N3 as T2) -- 256 rows ,N5(C) as (select 0 from N4 as T1 cross join N4 as T2) -- 65,536 rows ,IDs(ID) as (select row_number() over (order by (select NULL)) from N5) insert into dbo.ForwardingPointers(ID) select ID from IDs; select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent, forwarded_record_count from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.ForwardingPointers'),0,null,'DETAILED'); set statistics io on select count(*) as [RowCnt] from dbo.ForwardingPointers set statistics io off

As you see, there are 106 pages in the table and as result, SQL Server performs 106 reads during IAM scan. Let’s update our table and introduce forwarding pointers.

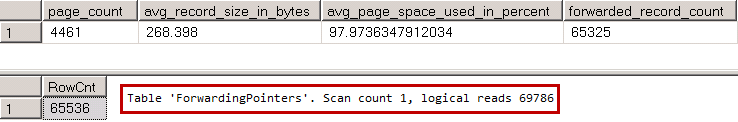

update dbo.ForwardingPointers set Val = replicate('a',500);

select page_count, avg_record_size_in_bytes, avg_page_space_used_in_percent, forwarded_record_count

from sys.dm_db_index_physical_stats(db_id(),object_id(N'dbo.ForwardingPointers'),0,null,'DETAILED');

set statistics io on

select count(*) as [RowCnt] from dbo.ForwardingPointers

set statistics io off

Now our table has 4,461 pages however it requires almost 70 thousand reads to perform a scan. As you see, the large number of the forwarding pointers leads to extra I/O operations and significantly reduces the performance of the queries accessing the data.

When the size of the forwarded row is reduced by another update and the data page with forwarding pointer has enough space to accommodate updated version of the row, SQL Server might move it back to original data page and remove the forwarding pointer. Nevertheless, the only reliable way to get rid of the all forwarding pointers is by rebuilding the heap table. You can do that by using an ALTER TABLE REBUILD statement or by creating and dropping a clustered index on the table.

Heap tables can be useful in staging environment where you want to import a large amount of data into the system as fast as possible. Inserting data into heap tables can often be faster than inserting it into tables with clustered indexes. Nevertheless, during a regular workload, tables with clustered indexes usually outperform heap tables due to their suboptimal space control and forwarding pointers.

Awesome article! I can’t believe updates are able to fragment so much a table.

Could you make an article about best practices of configuring the creation of custered and nonclustered indexes and how and when to rebuild or drop and recreate them?

Hi Hikari,

The simple answer – always create the clustered index and do not use heaps (maybe some ETLs are the exception) 🙂 As for how to create and maintain clustered and nonclustered indexes – it is much bigger topic. You need to take multiple factors into consideration and tune it for your particular system and workload.

I put a lot of info into my Pro SQL Server Internals book – maybe it would be helpful..

Sincerely,

Dmitri

As you know, the clustered index should be: Narrow, Unique, Static(never updated) and Ever-increasing.

I think create a clustered index on a customer ID is good idea, because that is unique and static and narrow.

The only problem is that the IDs will not be inserted to the table incrementally.

This cause insert speed will decrease and also index fragmentation.

If we consider an identity column for clustering, our queries aren’t specifically using this value.

What is the solution to this problem?

Hi Mehdi,

For the catalog entities, such as Customers, Articles, etc where you do not insert hundreds of rows per second, I would not necessarily worry about having index autoincrementing. Just rebuild it from time to time, tune FILLFACTOR a bit and you would be fine.

With all being said, analyze the size of the key – because I expect that you’d have plenty of FK here. It maybe more efficient in some cases to switch to identity in the table and in all referencing tables if your Customer ID is not narrow. This will improve performance of the joins in the system.

As usual, “it depends” 🙂

Sincerely,

Dmitri

Thanks a lot Mr Dmitri !

When we create CCI and nonclustered index on a table, how the nonclustered works ?

Whats happen when we search a specific row (key lookup or rowid lookup or etc) ?

Hi Mehdi,

It is similar to nonclustered index B-Tree on a heap table and RID lookup. Nonclustered indexes store row-id of the row in CCI – basically the combination of partition-id. rowgroup-id and offset. It’s a bit more complicated due to bitmap array and delete filter check (SQL Server needs to exclude already deleted rows) but all regular considerations (selectivity, # of estimated Key Lookups) apply here.

My 2nd edition of Pro SQL Server Internals has a lot of details on internals in the area. I’ll need to do the blog post one day. 🙂

Sincerely,

Dmitri

Excellent !

Another question !

How can create CCI on the Table with a LOB column at SQL server 2016 or 2014?

What is vertical partitioning?

I don’t want move LOB column to another table.

Thanks !

You cannot have LOBs in CCI in 2014/2016. It is supported in 2017 only. So your choices are either another table or switching to (8000) data types, perhaps with compression. With all being said, I’d argue that LOB columns are not good for columnstore anyway. What are you trying to accomplish?

I suspect that you are trying to use columnstore in OLTP system in order to save space. It is not the best choice. You can update columnstore but it does not scale well for OLTP workload. You’ll have quite a few interesting issues if data is volatile.

I would consider data partitioning here with CCI and B-Tree/In-Memory OLTP tables and partition views here.

No, i am not trying to use columnstore in OLTP system in order to save space.

If i want create columnstore index in OLTP,i must use filtered NCCI.

Thank you very much, for :

you would consider data partitioning here with CCI and B-Tree/In-Memory OLTP tables and partition views here.

How i choose the partition key at the following Table:

Create table tbl

(id int not null primary key, fname varchar(20), lname varchar(50), Registerdate datetime )

go

insert into tbl values (1, ‘Dmitri’, ‘Korotkevitch’, ‘2018-05-15 07:43:14’)

go

I want to partition the table Based on year for example (2015, 016, 2017, 2018, 2019).

I would not use horizontal partitioning on catalog entities. I would target large and volatile OLTP tables that store main stream of transactions (Orders, Events, Documents, etc). Something in hundreds millions, billions rows range.

Those tables usually have some kind of dates, which I would use as the criteria. OrderDate, EventDate, etc. So yes, your strategy based on year is correct. You need to decide right granularity though – for example, if data becomes static after 1 month, I would keep 1-2 months in B-Tree/In-Memory OLTP and move everything else to different table(s), which may be partitioned differently (yearly, for example).

OK, that was an example! i can not move data to different table. i think, i must add a computed column to events table and so i partition the table on computed column. this mean use computed column for partition key.

alter table [events] add ClPartitonkey as cast(year(Eventdate) as int ) persisted.

What is your opinion in the above scenario?

Why do you need to have surrogate key rather than partition by EventDate? You can use the range of partition column values without any issues.

In majority of cases, surrogate key would not help you to eliminate partitions from processing, unless you use it as query predicate. Partitioning by EventDate would be the better solution from that angle. It will also helps to reduce size of the data – partition column would become the part of CI and, therefore, all NCIs.

Excellent !

When EventDate is part of the clustered index, your solution is very good.

EventDate can be the best candidate for the partition key.

How to partition the master-details table when the primary key is the cluster?

Should I consider the primary key as a non-cluster?

For example, in the َAdventureworks database, [Sales].[SalesOrderHeader] and [Sales].[SalesOrderDetail].

How can we partition these two tables.

Thank you for the information!