The Enterprise Edition of SQL Server is all about availability features. It allows you to build robust High Availability and Database Maintenance strategies, which are a must for mission-critical systems. Unfortunately, all those benefits come at a cost. At a very high cost. The Enterprise Edition of SQL Server is anything but cheap. Enterprise Edition licenses are usually several times more expensive than Standard Edition licenses. This applies to both on-premises and Cloud installations. For example, in Microsoft Azure, VMs with Enterprise Edition are about 4 times more expensive than Standard Edition VMs.

Not every business can afford Enterprise Edition. And, to be honest, not every business even needs Enterprise Edition. It is entirely possible to build relatively big and complex solutions with the Standard Edition of SQL Server, especially with SQL Server 2014, which can utilize 128GB of RAM. I have personally worked with several multi-TB databases using the Standard Edition of SQL Server.

One of the common challenges with non-Enterprise Editions, however, is Index Maintenance. Enterprise Edition allows you to rebuild indexes while keeping the table online. Under the hood, it uses row versioning similar to the optimistic transaction isolation levels. With the exception of a short-lived shared (S) lock at the beginning and a schema-modification (Sch-M) lock at the end of the execution, no full table locks are held. Other users can access the table and read and modify its data.

Unfortunately, this is not the case with the Standard Edition, where only offline index rebuild is supported. Offline index rebuild acquires a schema-modification (Sch-M) lock for the duration of the execution, which blocks all access to the table, even in the read uncommitted transaction isolation level. Depending on the index size, a rebuild can be a very time-consuming operation. Obviously, you can use index reorganize, which is an online operation, instead of rebuild. However, it does not work as well as index rebuild on heavily fragmented tables.
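For reference, a reorganize of the index might look like the minimal sketch below. It assumes the index and table names used in the tests later in this post (IDX_CI and dbo.MyTable); the LOB_COMPACTION option is spelled out with its default value only to make it visible.

```sql
-- Online alternative that is available in every edition:
-- reorganize the leaf level of the index in place.
-- IDX_CI and dbo.MyTable are the names used in the tests in this post.
alter index IDX_CI on dbo.MyTable
reorganize with (lob_compaction = on); -- ON is the default
```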

Today, I am going to show you a very simple technique that can help decrease offline index rebuild time and, therefore, the time when the table is inaccessible to other sessions. It is not a magic silver bullet that helps everywhere. In fact, in some cases, it could even hurt rather than help. We will discuss that later in the post.

I am going to use a table from one of my production systems. That table has a couple dozen columns, including one nvarchar(max) column that hosts some LOB data. I rebuilt the index before the tests to make sure that initial fragmentation does not affect the execution time of the first test run.

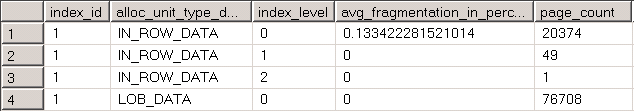

As you can see in Figure 1, the leaf level of the clustered index for IN_ROW data consists of 20,374 pages, which is about 159MB of data. LOB data consists of 76,708 pages, which is about 600MB of data.
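One way to obtain numbers like these is sys.dm_db_index_physical_stats. The query below is a minimal sketch, assuming the clustered index has index_id = 1 on dbo.MyTable; the size column simply multiplies the page count by the 8KB page size (for example, 20,374 pages × 8KB is about 159MB).

```sql
-- Leaf-level page counts per allocation unit of the clustered index.
-- DETAILED mode reads every page, so it can be slow on large indexes.
select
    ips.alloc_unit_type_desc
    ,ips.page_count
    ,ips.page_count * 8 / 1024 as [Size (MB)] -- 8KB pages, integer division
    ,ips.avg_fragmentation_in_percent -- NULL for LOB and row-overflow units
from sys.dm_db_index_physical_stats
    (db_id(), object_id(N'dbo.MyTable'), 1, null, 'DETAILED') ips
where ips.index_level = 0; -- leaf level only
```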

As the first step, let’s run the ALTER INDEX REBUILD statement and measure the execution time of the operation. I am clearing the buffer pool to emulate a cold cache before the test (DO NOT RUN IT IN PRODUCTION!). This is, obviously, the worst-case scenario; in real life, at least some of the data pages would be cached at the time of the index rebuild operation.

I am also running the index rebuild without parallelism, just to make sure that the results are consistent and to simplify the analysis. As a side note, my test server has a pretty slow I/O subsystem, and the execution time without the MAXDOP=1 hint is just about the same as with the hint.

-- Clearing buffer pool. DO NOT RUN IN PRODUCTION
checkpoint
go
dbcc dropcleanbuffers
go
-- Rebuilding Index
alter index IDX_CI on dbo.MyTable rebuild with (maxdop = 1)

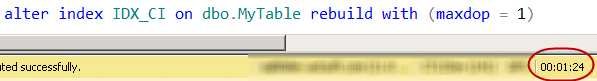

As you can see in Figure 2, the index rebuild time on my system is 1 minute and 24 seconds. Other sessions are unable to access the table during this time because of the schema-modification (Sch-M) lock held on the table.
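If you want to observe that lock yourself, you can query sys.dm_tran_locks from another session while the rebuild is running. This is a minimal sketch; the session ID 141 is only a placeholder for the @@SPID of the session that runs the rebuild.

```sql
-- Run from a separate session while the rebuild is in progress.
-- 141 is a placeholder for the @@SPID of the rebuilding session.
select
    tl.request_session_id
    ,tl.resource_type
    ,tl.resource_associated_entity_id as [Object Id]
    ,tl.request_mode -- Sch-M is expected for the table being rebuilt
    ,tl.request_status
from sys.dm_tran_locks tl
where
    tl.request_session_id = 141 and
    tl.resource_database_id = db_id() and
    tl.resource_type = 'OBJECT';
```

The query filters by session ID and returns the raw object ID rather than calling metadata functions such as object_name(), to stay clear of any metadata access against the table that is being rebuilt.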

Let’s repeat our test (again, with a cold cache) and analyze session waits during the index rebuild. We can do it by creating an Extended Events session with the code shown below. This code will work in SQL Server 2012 and 2014 and will require slight modifications in SQL Server 2008/2008 R2.

create event session [Waits] on server
add event sqlos.wait_info
(
action (sqlserver.session_id)
where
(
sqlserver.session_id = 141 -- @@SPID of the session with index rebuild
and opcode = 1 -- END of wait
)
)
add target package0.event_file(SET filename=N'C:\ExtEvents\Waits.xel',max_rollover_files=(0))
with
(
max_memory=4096 KB
,event_retention_mode=allow_single_event_loss
,max_dispatch_latency=10 SECONDS
)
go
alter event session [Waits] on server state = start

After the index rebuild command completes, let’s stop the session and look at the waits that occurred during the index rebuild.

alter event session [Waits] on server
state = stop
go
;with EventData(event_data)
as
(
select cast(event_data AS XML) AS event_data
from sys.fn_xe_file_target_read_file
('C:\ExtEvents\Waits*.xel',null,null,null)
)
,Waits
as
(
select
event_data.value ('(/event/@timestamp)[1]', 'datetime') as [Time],
event_data.value ('(/event/data[@name=''wait_type'']/text)[1]','varchar(255)') AS [Wait],
event_data.value ('(/event/data[@name=''duration'']/value)[1]','bigint') AS [Duration],
event_data.value ('(/event/data[@name=''signal_duration'']/value)[1]','bigint') AS [Signal]
from EventData
)
select
w.[Wait]
,count(*) as [Count]
,sum(w.Duration) as [Total Duration (ms)]
,sum(w.Duration) - sum(w.Signal) as [Resource Waits (ms)]
,sum(w.Signal) as [Signal Waits (ms)]
from Waits w
group by w.[Wait]

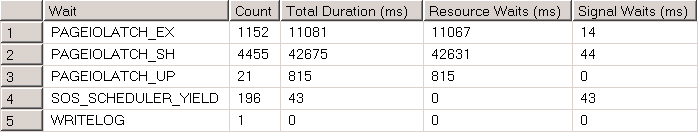

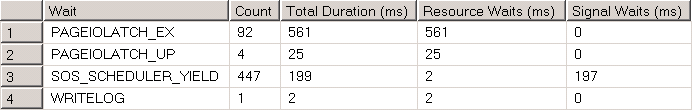

As you can see in Figure 3, the vast majority of the waits are PAGEIOLATCH*, which occur when SQL Server is waiting for a data page to be read from disk.

That discovery points us in the right direction. If a large portion of the index rebuild time was spent waiting for data to be read from disk, we could speed up the process by pre-fetching and caching the data in memory. SQL Server would not need to perform physical read operations, which should reduce the rebuild time.

Let’s try that and run the select below (again, using a cold cache for the sake of the test):

checkpoint
go
dbcc dropcleanbuffers
go
declare @T1 bigint, @T2 bigint
select
@T1 = avg(RecId) -- Making sure that IN_ROW pages are read
,@T2 = avg(len(LOB_Column)) -- Making sure that LOB pages are read
from dbo.MyTable with (nolock, index=IDX_CI)
option (maxdop 1)

I am forcing a scan of the clustered index, the one that we are going to rebuild. I am using the read uncommitted transaction isolation level with the NOLOCK hint. As you can guess, in this situation I am not worried about any data consistency issues that can arise.

However, there is one very important part: we need to make sure that SQL Server reads LOB data pages in addition to IN_ROW data pages. We can force it by accessing our LOB column in the select list. You can use the same technique to deal with ROW_OVERFLOW pages if the index has such allocation units.
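As an illustration of the ROW_OVERFLOW case, the select could be extended along the lines of the sketch below. Overflow_Column is a hypothetical variable-length column (for example, varchar(8000)) whose values may be pushed off-row; it does not exist in the table used in this post. The sketch follows the same approach as for the LOB column and assumes that evaluating len() on the column requires SQL Server to read its off-row data.

```sql
declare @T1 bigint, @T2 bigint, @T3 bigint
select
    @T1 = avg(RecId) -- touches IN_ROW pages
    ,@T2 = avg(len(LOB_Column)) -- touches LOB pages
    ,@T3 = avg(len(Overflow_Column)) -- hypothetical column: touches ROW_OVERFLOW pages
from dbo.MyTable with (nolock, index=IDX_CI)
option (maxdop 1)
```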

Finally, I am also referencing one of the columns from IN_ROW data to make sure that those pages are also read into memory. In some cases, with large tables and the read uncommitted transaction isolation level, SQL Server can choose to use an allocation order scan instead of an index scan. I seriously doubt that SQL Server can perform such an operation and scan only LOB data pages in our select, especially with the index hint in use, but it is better to be safe than sorry.

The execution time of the select on my system is 1 minute and 3 seconds, as shown in Figure 4.

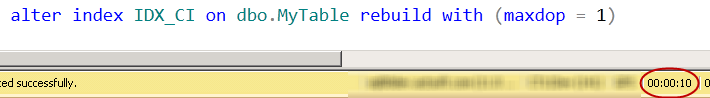

Now, let’s run the index rebuild again. As you can see, with the index pages cached in memory, the index rebuild time is just 10 seconds, as shown in Figure 5. Even though the total time of both operations (select and index rebuild) is comparable to the original index rebuild over a cold cache, the time when the table was inaccessible due to the schema-modification (Sch-M) lock is about 8 times shorter than before.
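Put together, the two steps can be scripted as a single batch that also records how long each step takes. This is a minimal sketch that reuses the statements from this post; the timing variables (@Start, @PrefetchMs) are introduced here purely for illustration.

```sql
declare
    @T1 bigint, @T2 bigint
    ,@Start datetime2(3) = sysdatetime()
    ,@PrefetchMs int;

-- Step 1: pre-fetch IN_ROW and LOB pages; the table stays accessible
select
    @T1 = avg(RecId)
    ,@T2 = avg(len(LOB_Column))
from dbo.MyTable with (nolock, index=IDX_CI)
option (maxdop 1);

set @PrefetchMs = datediff(ms, @Start, sysdatetime());
set @Start = sysdatetime();

-- Step 2: offline rebuild; the table is blocked only during this step
alter index IDX_CI on dbo.MyTable rebuild with (maxdop = 1);

select
    @PrefetchMs as [Prefetch (ms)]
    ,datediff(ms, @Start, sysdatetime()) as [Rebuild (ms)];
```

Running both steps back to back keeps the window short in which other activity could evict the freshly cached pages before the rebuild starts.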

If you look at the waits during the last index rebuild, you will see that PAGEIOLATCH* waits have practically disappeared, as shown in Figure 6.

While this technique can significantly reduce blocking during offline index rebuild, it is not for everyone. First and foremost, you should be careful with large indexes, especially when the system has a limited amount of memory and/or low page life expectancy. Caching large indexes requires a significant amount of memory and, therefore, could flush the buffer pool, possibly evicting the index pages you have just read. This is especially important if you expect data growth in the future. Small indexes today can become large ones tomorrow.
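A quick way to get a feeling for memory pressure is to look at the Buffer Manager performance counters. The query below is a minimal sketch; which values count as "low" depends on your system and workload, so treat the output as an input to your own judgment rather than a pass/fail check.

```sql
-- Page life expectancy (in seconds) and the number of database pages
-- currently held in the buffer pool (8KB each).
select
    pc.object_name
    ,pc.counter_name
    ,pc.cntr_value
from sys.dm_os_performance_counters pc
where
    pc.object_name like N'%Buffer Manager%' and
    pc.counter_name in (N'Page life expectancy', N'Database pages');
```

Comparing the "Database pages" value with the total page count of the index gives a rough idea of how much of the buffer pool the pre-fetch would claim.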

Second, it is beneficial to analyze how many pages of the index are typically cached in memory. Obviously, if most of the pages are already cached, you would not gain much from running the select and pre-fetching the remaining pages into memory. On the other hand, logical reads are fast anyway, so a select over a warm cache would not take much time.

You can use the script below to analyze the number of index pages that are cached. You can compare those numbers with the results of sys.dm_db_index_physical_stats to estimate the share of cached pages per allocation unit of the index.

select i.name, au.type_desc, count(*) as [Cached Pages]
from
sys.dm_os_buffer_descriptors bd with (nolock)
join sys.allocation_units au with (nolock) on
bd.allocation_unit_id = au.allocation_unit_id
join sys.partitions p with (nolock) on
(au.type in (1,3) and au.container_id = p.hobt_id) or
(au.type = 2 and au.container_id = p.partition_id)
join sys.indexes i with (nolock) on
p.object_id = i.object_id and
p.index_id = i.index_id
where
bd.database_id = db_id() and
p.object_id = object_id (N'dbo.MyTable') and
p.index_id = 1 -- ID of the index
group by
i.name, au.type_desc
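If you prefer a single result set, a variation of the script can return the share of cached pages directly. The sketch below swaps in the used_pages column of sys.allocation_units as the denominator instead of sys.dm_db_index_physical_stats. That is an approximation chosen for convenience: used_pages counts all pages in use by the allocation unit, not only leaf-level pages.

```sql
select
    i.name
    ,au.allocation_unit_id
    ,au.type_desc
    ,au.used_pages as [Used Pages]
    ,count(bd.page_id) as [Cached Pages]
    ,convert(decimal(5,1),
        100. * count(bd.page_id) / nullif(au.used_pages, 0)) as [Cached (%)]
from
    sys.partitions p with (nolock)
        join sys.indexes i with (nolock) on
            p.object_id = i.object_id and
            p.index_id = i.index_id
        join sys.allocation_units au with (nolock) on
            (au.type in (1,3) and au.container_id = p.hobt_id) or
            (au.type = 2 and au.container_id = p.partition_id)
        -- left join keeps allocation units that have no cached pages at all
        left join sys.dm_os_buffer_descriptors bd with (nolock) on
            bd.allocation_unit_id = au.allocation_unit_id and
            bd.database_id = db_id()
where
    p.object_id = object_id(N'dbo.MyTable') and
    p.index_id = 1 -- ID of the index
group by
    i.name, au.allocation_unit_id, au.type_desc, au.used_pages
```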

Finally, this technique is useful when the biggest bottleneck in the system is I/O performance, as happened in my case. This often happens in Cloud-based systems (SQL Server in VMs), where I/O latency is usually high and the Standard Edition of SQL Server is commonly used due to its cost. In that scenario, pre-fetching data into the cache could significantly reduce the locking time of offline index rebuild when you have enough memory for the index data.
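To check whether read latency is a concern on your system, you can look at sys.dm_io_virtual_file_stats. The query below is a minimal sketch for the current database. Keep in mind that the numbers are cumulative since the database files were opened (typically since the last SQL Server restart), so they show long-term averages rather than the current state.

```sql
-- Average read latency per file of the current database
select
    mf.name as [File]
    ,mf.type_desc
    ,fs.num_of_reads
    ,fs.io_stall_read_ms
    ,fs.io_stall_read_ms / nullif(fs.num_of_reads, 0) as [Avg Read Latency (ms)]
from sys.dm_io_virtual_file_stats(db_id(), null) fs
    join sys.master_files mf on
        fs.database_id = mf.database_id and
        fs.file_id = mf.file_id;
```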
