Last time we discussed 2 design patterns that can be used when you store custom attributes in SQL Server database. Today I’d like to talk about another pattern known as Name/Value pairs and sometimes called as Entity-Attribute-Values.

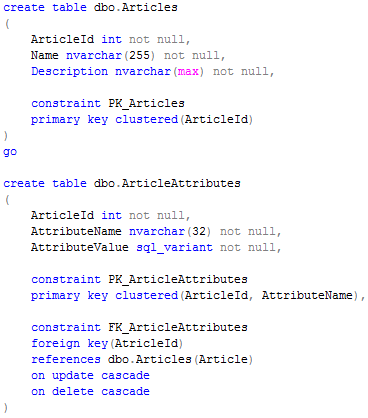

This pattern is very old and well known. Something like that (click on the image to open it in the new window):

I’m pretty sure that 75% of developers tried to use it in one way or another. I’ve seen quite a few different implementations. I even saw the implementation where entire database consisted of just 2 tables: Objects, with 2 columns – ID and ObjectType, and Attributes – similarly to what we saw above with exception that value was the string (it was prior to sql_variant days). And system even worked – kind of, in development and QA. Funniest thing – that system had even been sold and first customer was a wholesales company that replaced their existing point-of-sale system. Poor customers and happy consultants who were hired to “solve” the problem.. 🙂

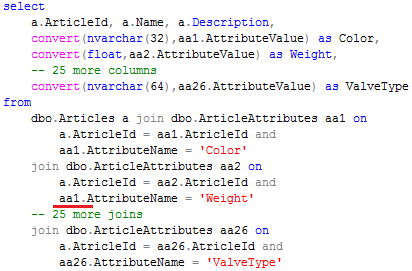

There is one killing factor though – you cannot store more than 8000 bytes in sql_variant. So (max) data types cannot be supported. If this is not the deal breaker – the design looks very flexible (and in fact it is). The general problem here is the attribute access cost. Classic approach produces 1 join per attribute. Something like that:

Could be inner join, could be outer – depends on the situation and design. But besides a lot of joins there is another problem. Developers are lazy. Everytime they need to write the statement like that, they would use cut and paste (see the nice red undeline above). And you can imagine amount of errors it could introduce.

Of course, when we talk about client application, we can select all attributes to the client as the rowset and pivot (remember this magic word) data there:

Unfortunately that would not solve the problem when we need to sort/page/filter by the attributes nor, more importantly, help us with reports. And customers demand reports.

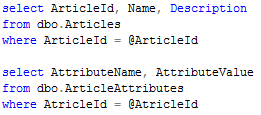

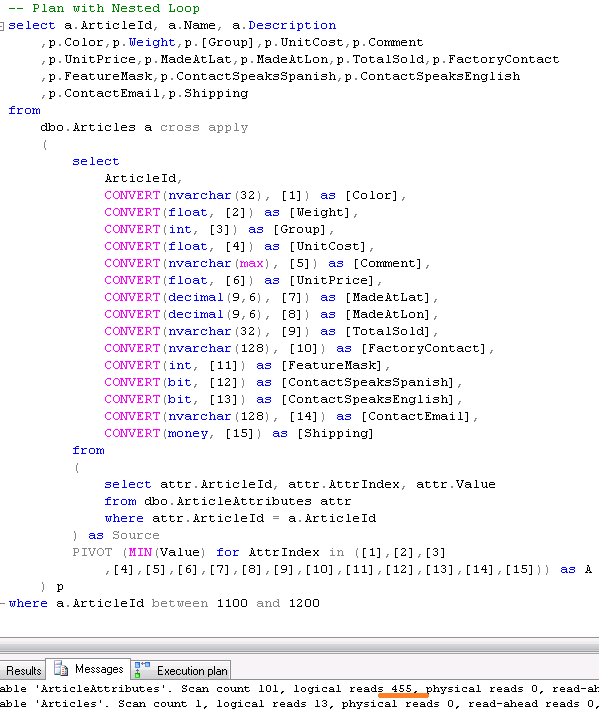

I’m not going to analyze that approach based on criteria we specified. I’ll show you the examples how attribute access cost in the implementation based on joins kills that. But there is another way. With SQL 2005 and above, you can use PIVOT which is part of T-SQL. So let’s take a look. First, let’s create Articles and ArticleAttributes table

As you probably noticed, I replaced Attribute Name with Index. This will add a little bit more complexity to the code but same time it saves us storage space. We are going to save a lot of records in that table. And that’s usual “It depends” question – is additional complexity worth that. There is also very good idea to keep some kind of “Metadata” table that stores some information about attributes and types. This is essential in case if you store attribute indexes but it also helps even in case if you store Attribute Names.

Now let’s populate it with the data

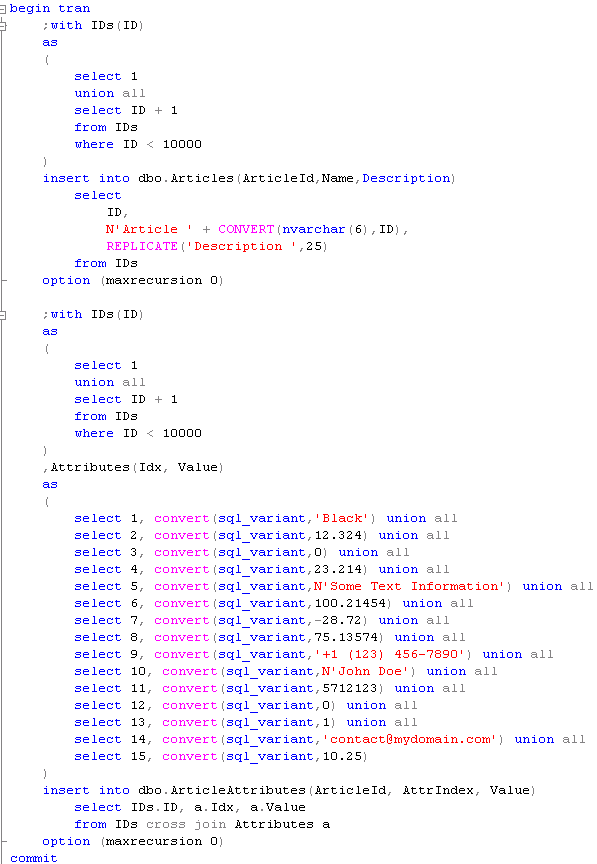

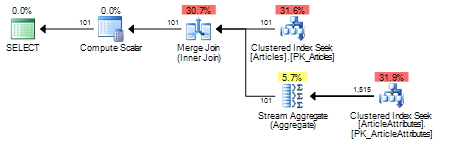

Let’s enable IO statistics and execution plan and see how it behaves when we need to access the data. First – classic approach with Joins:

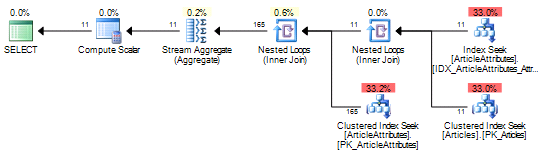

As you can see this introduces the plan with a lot of joins and quite a lot of IO. Now let’s try to reshape the query to use PIVOT.

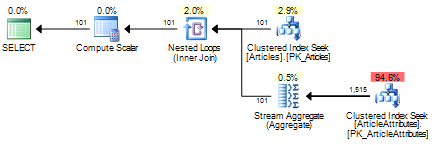

As you can see – that’s far far better. You can play with the shape of the query if you want to change execution plan – for example approach below gives you nested loop instead of merge join.

As you can see the difference in IO is dramatic.

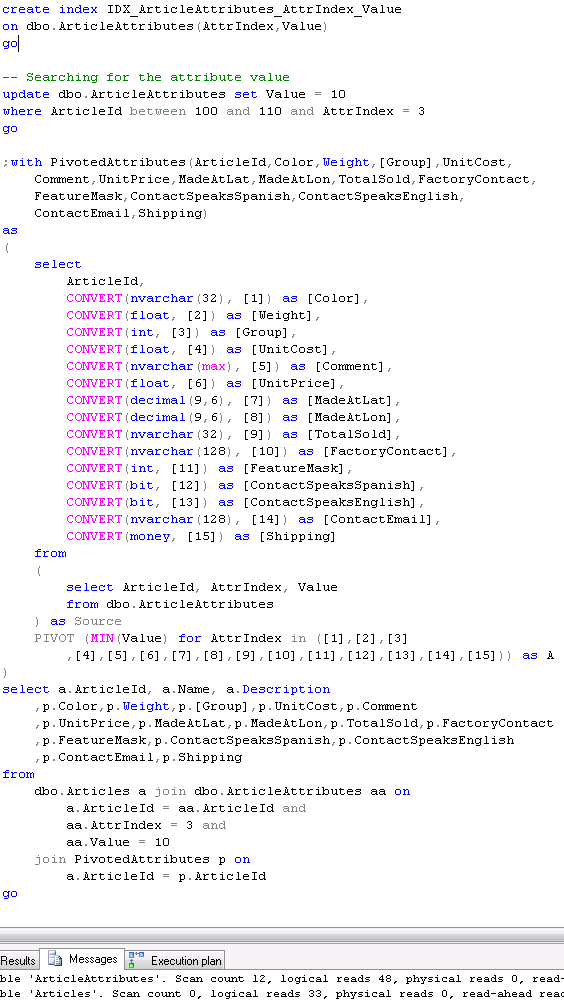

Let’s play with a couple other scenarios. What if we want to search for specific value in one of the attributes? Well, we need to create the index in such case.

The biggest problem here is the size of the key. With index it cannot exceed 900 bytes. Value (which is SQL Variant) can go far above that. So we basically have 2 choices. First either do not include Value to the index (or have it as included column) or perhaps, use filtered index and disable the (index) search for some fields. Even if first option does not look very promising, there is one thing to consider. Are there any other criteria for the search? If all your use cases include some additional columns in the query it could make sense to push those columns to Attributes table and make them part of the index. As the real-life example, assuming you’re collecting data and all your queries include time range. In such case you can push ATime column to Attributes table and make the index as (AttrIndex, ATime) include(Value). While it uses the range scan, it could be acceptable because of additional filter on ATime that limits number of records.

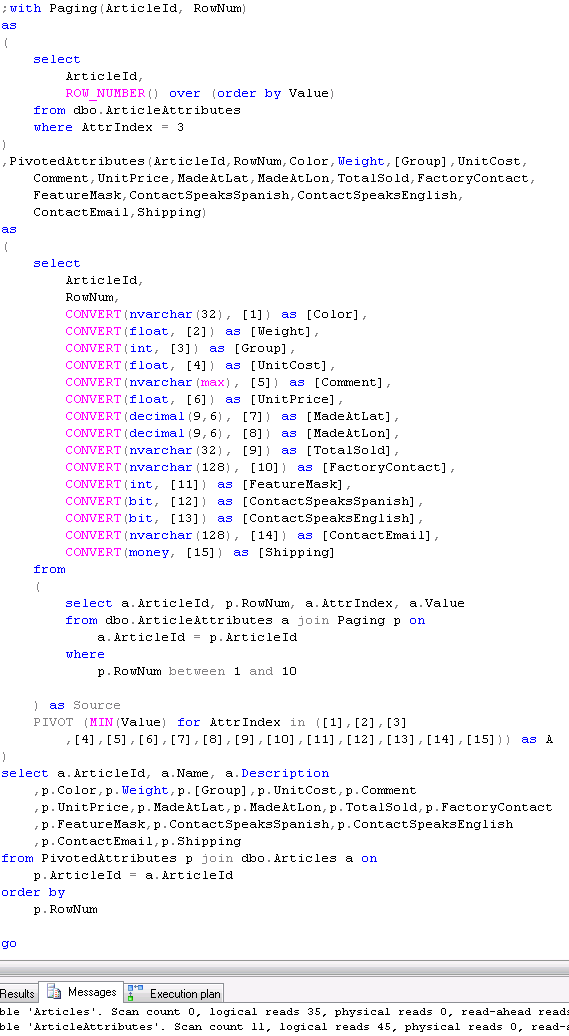

Another scenario – sorting/paging. Assuming you want to display 1 page of data (10 rows). You can do something like that:

Let’s go through the list of criteria for this approach:

- Multiple schemas support – Yes.

- Expandability. Yes.

- Online schema change. Yes. Although if customer needs to be able to change data type of the attribute some work is required.

- Storage cost. Medium to High depend on indexes and use cases

- Attribute access overhead. 1 join + PIVOT overhead

- Search-friendly. Yes with extra index. Possible issues with large values (900 bytes key size limitation)

- Sorting/Paging friendly. Same as above.

- Upfront knowledge about data schema. Required. Client needs to know about the schema in order to build PIVOT statement. On the server side dynamic SQL could be required

And the last one is the biggest limitation of the design. While it offers very good performance, you have to babysit the solution. You need to think about use cases to design queries and indexes. You need to maintain indexes – you’ll get excessive fragmentation there.

Next time will do some performance comparison of the various methods

Source code is available for download

P.S. I want to thank Vladimir Zatuliveter (zatuliveter _at_ gmail _dot_com) for his help with preparation of this post.

Great article! Two years on now. Have you anything new to share?

Hi Jack,

Thank you!

Well, I still owe performance comparison. Will do it sometime later this year.

Sincerely,

Dmitri

Very useful, thank you.

Thank you, Arash!

Hello Dmitri,

Thanks a lot for this useful article.

What are you your thoughts about using MongoDB (or any other Document DB) for this kind of requirements?

Thanks,

Bhushan

Hi Bhushan,

I believe that nowadays, proper data tier should combine multiple technologies, including NoSQL. Especially, on the Clouds.

The devil, is obviously, in details. While in many cases you can move some data to other engines, key question is transactional consistency. Can you afford the chance that data will become inconsistent – e.g. main document was saved but attributes did not. Or, if attributes are slightly out of sync (changes were committed in different time).

So, as usual, it depends 🙂

Sincerely,

Dmitri

Great post, any updates? 🙂

Thank you, Daniel!

I will probably update the post one day. But in reality, there is very little to do it. Nowadays, I perhaps would ask if you want to use relational DB to store such data to begin with 🙂

Sincerely,

Dmitri