Today I’d like us to talk about one practical locking problem: how to deal with deadlocks during DDL operations such as altering tables and partition functions, switching partitions and so on. This problem is very common in OLTP environments where a table is constantly queried by multiple sessions. A common example is the sliding window pattern, where the table is partitioned on some time interval (daily, weekly, monthly, etc.) and data is purged on a regular basis by switching a partition to a temporary table. Let’s try to understand why we have deadlocks here and what we can do to solve the problem.

First of all, as a prerequisite, let’s assume that we implemented our partitioning correctly. The most important factor is that our operations require neither data movement nor scans. For example, we don’t split ranges in the partition function in a way that requires physically moving data to another partition (e.g. we pre-allocate an empty partition rather than splitting a range at a value in the middle of a partition that already has some data). In that case partition operations are purely metadata operations and only schema locks are involved.

Another thing to avoid is mixing DML and DDL statements in one transaction. Let’s think about the following example (let’s ignore page locks and schema stability (SCH-S) locks for simplicity’s sake):

- Session 1 starts a transaction and updates one row in the table. At this stage we have an (X) lock on the row and an (IX) lock on the table.

- Session 2 tries to select data from the table and is blocked by Session 1. Now Session 2 is waiting for an (S) lock on the row and holds an (IS) lock on the table.

- Now Session 1 tries to switch a partition. That operation needs a (SCH-M) lock on the table, and it is blocked by the (IS) lock held by Session 2.

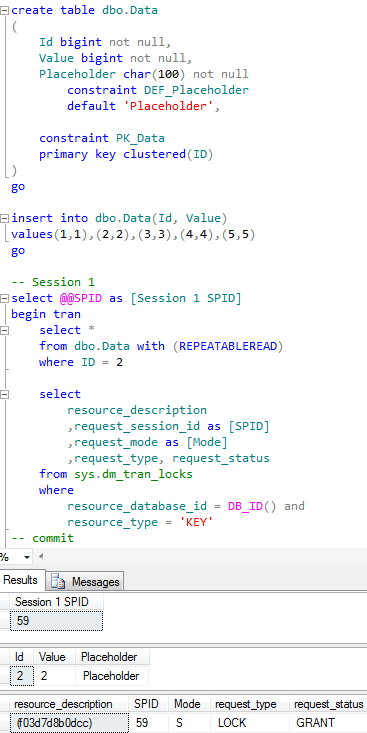

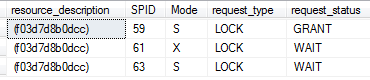
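The three steps above can be sketched in T-SQL as follows. This is only an illustration: `dbo.Orders`, `dbo.OrdersStaging`, the column names and the partition number are hypothetical and do not come from the original scripts.

```sql
-- Assumed objects: dbo.Orders is a partitioned table and dbo.OrdersStaging
-- is an empty table with the same structure on the same filegroup.

-- Session 1
begin tran;
    -- Step 1: acquires (X) on the row and (IX) on the table
    update dbo.Orders set Amount = Amount + 1 where OrderId = 1;

    -- ... Session 2 runs its SELECT at this point and gets blocked ...

    -- Step 3: needs (SCH-M) on the table; blocked by Session 2's (IS) lock
    alter table dbo.Orders switch partition 1 to dbo.OrdersStaging;
commit;

-- Session 2
-- Step 2: acquires (IS) on the table, then waits for (S) on the row
select Amount from dbo.Orders where OrderId = 1;
```

Keeping the UPDATE and the ALTER TABLE in separate transactions removes this particular cycle, because Session 1 no longer holds the row lock while it waits for (SCH-M).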

Classic deadlock: Session 1 waits for Session 2 to release its (IS) lock, while Session 2 waits for Session 1 to release its (X) lock.

But let’s assume that this is not the case. What happens when we have multiple locks on the same object? Generally speaking, lock requests are serialized. In some cases existing locks can even be re-used. Let’s see that. First, let’s create a table and add a few records there. Next, let’s have session 1 read one row in repeatable read mode. As we remember, it holds an (S) lock on the resource until the end of the transaction. Again, for simplicity’s sake, let’s look at the row (key) level locks only.

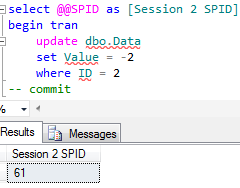
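A minimal sketch of this setup might look as follows. The table `dbo.LockDemo` and its columns are assumptions made for illustration, not the names used in the original script.

```sql
-- Hypothetical demo table
create table dbo.LockDemo
(
    ID int not null,
    Value int not null,
    constraint PK_LockDemo primary key clustered (ID)
);

insert into dbo.LockDemo (ID, Value) values (1, 1), (2, 2), (3, 3);

-- Session 1: read one row in repeatable read mode and keep the transaction open
set transaction isolation level repeatable read;
begin tran;
    select Value from dbo.LockDemo where ID = 1;

    -- Locks held by this session; the KEY row should show request_mode = S
    select resource_type, resource_description, request_mode, request_status
    from sys.dm_tran_locks
    where resource_database_id = db_id()
      and request_session_id = @@spid;
-- no commit yet: the (S) lock stays until the transaction ends
```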

Now let’s try to update the row in another session. This session tries to acquire an (X) lock on the resource and is blocked because an (X) lock is not compatible with an (S) lock.

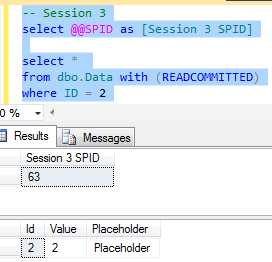
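The blocked update can be reproduced with a statement like the one below, assuming a hypothetical table `dbo.LockDemo` with a clustered key `ID` and another session holding an (S) lock on the row with `ID = 1`.

```sql
-- Session 2: requests (X) on the key and waits behind the (S) lock of session 1
update dbo.LockDemo
set Value = Value + 1
where ID = 1;
```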

Now let’s read the same row in read committed mode from a third session. As we can see, everything works without blocking.

Let’s look at which locks were acquired using SQL Profiler.

As you see, the session acquired intent (IS) locks but did not acquire a shared (S) lock. There is already an (S) lock from session 1, which guarantees that the row has not been modified by uncommitted transactions. There is no reason to acquire another (S) lock, because in the read committed isolation level (S) locks on rows are released immediately.

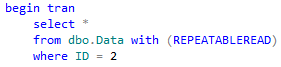

But what happens if we try to read the row in repeatable read mode again? In that isolation level the session needs to keep the (S) lock until the end of the transaction, which is why it tries to acquire the lock.

As you can see, it’s blocked because there is an (X) lock in the queue. So in order to be granted, a lock needs to be compatible with all the locks on that resource, no matter whether they are granted or not.
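One way to observe the queue is to query `sys.dm_tran_locks` from a separate session while the others are waiting. The sketch below again assumes a hypothetical table `dbo.LockDemo` with a clustered key `ID`.

```sql
-- Session 4: repeatable read needs its own (S) lock, so it queues behind the waiting (X)
set transaction isolation level repeatable read;
begin tran;
    select Value from dbo.LockDemo where ID = 1;

-- Monitoring session: granted and waiting key lock requests side by side
select request_session_id, resource_description, request_mode, request_status
from sys.dm_tran_locks
where resource_database_id = db_id()
  and resource_type = 'KEY'
order by resource_description, request_session_id;
```

In the output, `request_status` distinguishes granted requests (`GRANT`) from queued ones (`WAIT`).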

It’s worth mentioning that the first scenario, when session 3 ran in read committed mode and did not acquire a lock on the resource, can be considered an internal optimization. In some cases SQL Server still acquires another shared (S) lock even if a shared (S) lock is already held. In that case the query would be blocked, just as in the repeatable read isolation level.

Keeping all this in mind we can ask: why would we have deadlocks if locks are serialized? Wouldn’t the session requesting the schema modification (SCH-M) lock wait until the other locks are released and proceed from there? Yes and no. Let’s add one more thing to the mix: lock partitioning. This is quite a confusing term, and it has nothing to do with table partitioning. In a nutshell, when the server has 16 or more CPUs (think about a dual quad-core with hyperthreading enabled), SQL Server starts to partition intent (IS, IX, IU) and schema stability (SCH-S) object locks on a per-CPU basis. Those (intent and schema stability) locks are acquired and stored within one (or a few) lock partitions only. When another session needs to acquire an S, X, U or SCH-M lock on the object, that lock has to be acquired across all lock partitions. As I mentioned, this behavior is on when the system has 16 or more CPUs and cannot be disabled.

So let’s take a look at one of the scenarios:

- Session 1 executes a complex query that acquires an (IS) and/or (SCH-S) lock on lock partition 5 (just an example).

- Session 2 tries to alter the table and starts to acquire (SCH-M) locks on each individual lock partition. It successfully acquires the locks on partitions 1-4 and is blocked on partition 5 by the (IS) or (SCH-S) lock held by Session 1.

- Session 1 tries to acquire another (IS) and/or (SCH-S) lock on lock partition 2. It is blocked by the (SCH-M) lock held by Session 2.

Classic deadlock again.
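On a server where lock partitioning is active, the per-partition object locks from the scenario above can be inspected through the `resource_lock_partition` column of `sys.dm_tran_locks`. The query below is a sketch; `dbo.Orders` is a hypothetical table name.

```sql
-- Object-level locks on one table, broken down by lock partition.
-- resource_lock_partition is 0 for lock resources that are not partitioned.
select request_session_id, resource_lock_partition, request_mode, request_status
from sys.dm_tran_locks
where resource_type = 'OBJECT'
  and resource_database_id = db_id()
  and resource_associated_entity_id = object_id(N'dbo.Orders')
order by request_session_id, resource_lock_partition;
```

An intent lock would be expected to appear on a single partition, while a (SCH-M) request would appear once per partition, some rows granted and some waiting.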

Unfortunately, there is very little we can do about it. Lock partitioning cannot be disabled with documented approaches. There is the undocumented trace flag T1229 that does the trick, although using undocumented trace flags is dangerous and not recommended in production.

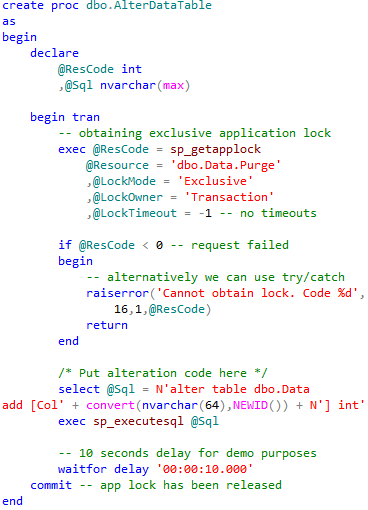

In case you have a dedicated data access tier (which is always a great idea), a good option is to serialize access to the table via application locks. The main idea of this method is that any code that accesses the object acquires an application lock: DDL code acquires an exclusive lock and DML code acquires a shared lock. Application locks are not partitioned, and as a result requests are serialized. Let’s take a look. First, let’s define the stored procedure that alters the metadata.

This is just an example. Obviously you need to think about timeouts, exception handling, retry logic and other things, but hopefully it gives you the idea.

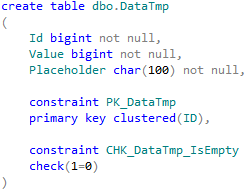
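A procedure along these lines could look like the sketch below. The procedure name, the lock resource name `dbo.Orders`, the tables and the 30-second timeout are all assumptions chosen for illustration; only `sp_getapplock` and its parameters are actual SQL Server features.

```sql
create procedure dbo.PurgeOldestPartition -- hypothetical name
as
begin
    set nocount on;
    set xact_abort on;

    declare @ResCode int;

    begin tran;
        -- Exclusive application lock: waits until all readers have released
        -- their shared application locks on the same resource name
        exec @ResCode = sp_getapplock
            @Resource = N'dbo.Orders',
            @LockMode = 'Exclusive',
            @LockOwner = 'Transaction',
            @LockTimeout = 30000; -- milliseconds

        if @ResCode >= 0 -- 0 = granted, 1 = granted after waiting
        begin
            alter table dbo.Orders switch partition 1 to dbo.OrdersStaging;
            commit; -- a transaction-owned application lock is released here
        end
        else
        begin
            rollback;
            raiserror(N'Cannot acquire the application lock on dbo.Orders', 16, 1);
        end;
end;
```

The resource name is just a string agreed upon by all callers; it only protects the table if every piece of code that touches the table requests a lock on the same name.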

Now let’s create the stored procedure that reads data from the table. But first, we need to define what we should do if we are blocked and time out. Of course, we can return an error code to the client, but another method that can be useful in some cases is to return an empty result set. In order to do that, let’s create another (empty) table with the same structure as the original one.

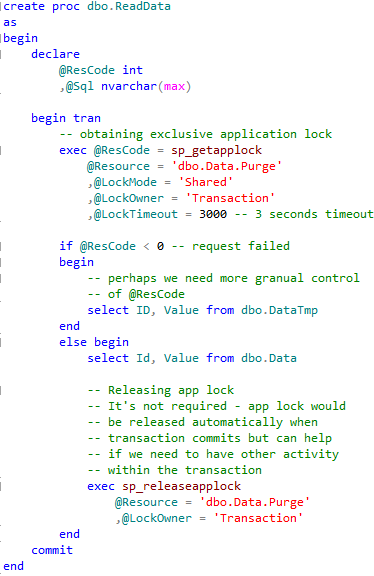
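One quick way to get such a table is `SELECT ... INTO` with a predicate that is never true. The table names are hypothetical, and note that this copies column definitions only, not indexes or constraints.

```sql
-- Creates dbo.OrdersEmpty with the same columns as dbo.Orders and no rows
select *
into dbo.OrdersEmpty
from dbo.Orders
where 1 = 0;
```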

Now let’s define the stored procedure.
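A reader procedure in this style might be sketched as follows. The procedure, table and column names are assumptions made for illustration; the lock resource name must match the one used by the DDL code.

```sql
create procedure dbo.GetOrders -- hypothetical name
    @CustomerId int
as
begin
    set nocount on;

    declare @ResCode int;

    begin tran;
        -- Shared application lock: compatible with other readers,
        -- incompatible with the exclusive lock taken by the DDL code
        exec @ResCode = sp_getapplock
            @Resource = N'dbo.Orders',
            @LockMode = 'Shared',
            @LockOwner = 'Transaction',
            @LockTimeout = 3000; -- milliseconds

        if @ResCode >= 0
            select OrderId, CustomerId, Amount
            from dbo.Orders
            where CustomerId = @CustomerId;
        else
            -- Lock not granted (for example, a timeout): same shape, no rows
            select OrderId, CustomerId, Amount
            from dbo.OrdersEmpty
            where CustomerId = @CustomerId;
    commit;
end;
```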

As you can see, if the SP cannot obtain the application lock within 3 seconds, it reads data from the empty table and returns an empty result set to the client. Otherwise it reads the main table. Obviously, the biggest downside of this method is that it does not work well when there is no dedicated data access tier in place and the object is accessed from various places. In that case the old classic approach with try/catch and retry logic is your friend. And, of course, we can use SET DEADLOCK_PRIORITY to reduce the chance that the session with the DDL statement is chosen as the deadlock victim.
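The try/catch approach could be sketched like this. The retry count, the delay and the object names are arbitrary assumptions, and the parameterless `THROW` requires SQL Server 2012 or later.

```sql
-- Make this session a less likely deadlock victim than sessions at the default priority
set deadlock_priority high;

declare @Retry int = 3; -- assumed number of attempts

while @Retry > 0
begin
    begin try
        begin tran;
            alter table dbo.Orders switch partition 1 to dbo.OrdersStaging;
        commit;
        set @Retry = 0; -- success, leave the loop
    end try
    begin catch
        if @@trancount > 0 rollback;
        set @Retry -= 1;

        -- Re-raise anything that is not a deadlock (error 1205),
        -- and the deadlock itself once the attempts are used up
        if error_number() <> 1205 or @Retry = 0
        begin
            ;throw;
        end;

        waitfor delay '00:00:01'; -- brief pause before the next attempt
    end catch;
end;
```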

Source code is available for download
