As with any new technology, adoption of In-Memory OLTP comes at a cost. You need to acquire and/or upgrade to SQL Server 2014, spend time learning the technology and, if you are migrating an existing system, refactor code and test the changes.

Unfortunately, system refactoring can be complex and time consuming. SQL Server 2014 In-Memory OLTP has several important limitations, which can dramatically increase the cost of migration. To name just few – it does not support off-row storage limiting you to 8,060-byte rows nor support CHECK, UNIQUE, FOREIGN KEY constraints and triggers. All those limitations can be addressed by schema and code refactoring; however, in some cases, that refactoring can require significant amount of efforts.

Today, I would like to start the series of the blog posts discussing how we can address specific limitations that exist in the first release of In-Memory OLTP. I will start with supporting uniqueness and referential integrity in the system. Unfortunately, In-Memory OLTP does not allow you to define foreign keys nor unique indexes and constraints besides a primary key. Such limitation rarely prevents us from adoption of the new technology. Nevertheless, those constraints help to keep the data clean and allow to detect data quality issues and bugs in the code at early stages of development.

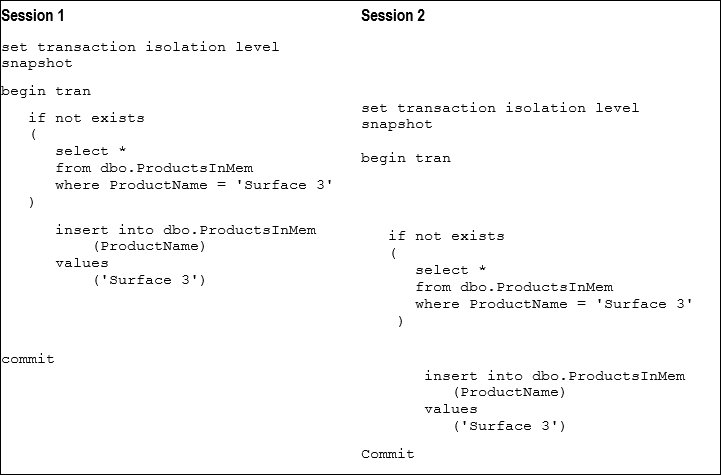

To make matter worse, lock-free nature of In-Memory OLTP makes the code approach tricky. In-Memory OLTP transactions do not see any uncommitted changes done by the other transactions. For example, if you ran the code from Figure 1 in default SNAPSHOT isolation level, both transactions would successfully commit without seeing each other changes violating ProductName uniqueness.

Fortunately, that situation can be addressed by using SERIALIZABLE transaction isolation level. As you remember, In-Memory OLTP validates serializable consistency rules by maintaining transaction scan set. As part of serializable rules validation, In-Memory OLTP checks for the phantom rows making sure that other sessions did not insert any rows that were previously invisible for the active transactions.

Code below creates memory-optimized table and natively compiled stored procedure that inserts data there running in SERIALIZABLE isolation level. Any inserts done through this stored procedure guarantee uniqueness of the ProductName even in multi-user concurrent environment.

The SELECT query builds transaction scan set, which will be used for serializable rule validation. That validation would fail if any other sessions inserted a row with the same ProductName while transaction is still active. Unfortunately, the first release of In-Memory OLTP does not support subqueries and it is impossible to write the code using IF EXISTS construct.

create table dbo.ProductsInMem

(

ProductId int not null identity(1,1)

constraint PK_ProductsInMem

primary key nonclustered hash

with (bucket_count = 65536),

ProductName nvarchar(64)

collate Latin1_General_100_BIN2 not null,

ShortDescription nvarchar(256) not null,

index IDX_ProductsInMem_ProductName nonclustered(ProductName)

)

with (memory_optimized = on, durability = schema_and_data);

create procedure dbo.InsertProduct

(

@ProductName nvarchar(64) not null

,@ShortDescription nvarchar(256) not null

,@ProductId int output

)

with native_compilation, schemabinding, execute as owner

as

begin atomic with

(

transaction isolation level = serializable

,language = N'English'

)

declare

@Exists bit = 0

-- Building scan set and checking existense of the product

select @Exists = 1

from dbo.ProductsInMem

where ProductName = @ProductName

if @Exists = 1

begin

;throw 50000, 'Product Already Exists', 1;

return

end

insert into dbo.ProductsInMem(ProductName, ShortDescription)

values(@ProductName, @ShortDescription);

select @ProductID = scope_identity()

end

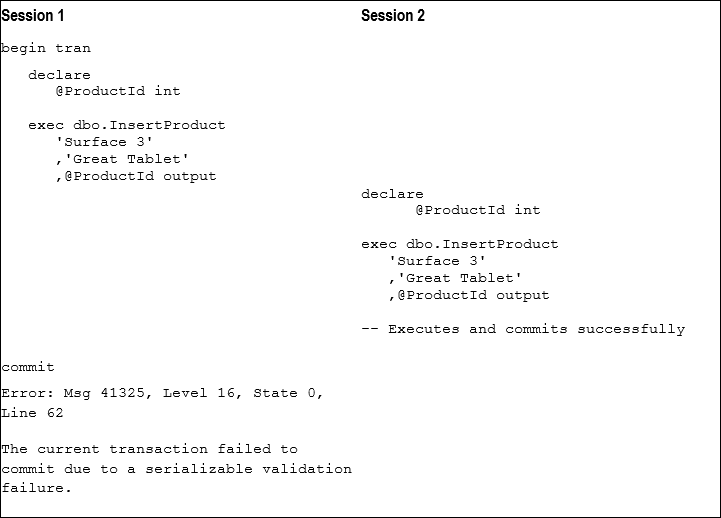

You can validate behavior of the stored procedure by running it in two parallel sessions as shown in Figure 2 below. Session 2 successfully inserts a row and commits the transaction. Session 1, on the other hand, would fail on commit stage with Error 41325.

Obviously, this approach would work and enforce the uniqueness only when you have full control over data access tier and have all INSERT and UPDATE operations performed through the specific set of stored procedures and/or code. INSERT and UPDATE statements executed directly against a table could easily violate uniqueness rules. However, you can reduce the risk by revoking INSERT and UPDATE permissions from the users giving them the EXECUTE permission on the stored procedures instead.

You can use the same technique to enforce referential integrity rules. Code below creates Orders and OrderLineItems tables and two stored procedures InsertOrderLineItems and DeleteOrders enforcing referential integrity between those tables. I am omitting OrderId update scenario, which is very uncommon in the real life.

create table dbo.Orders

(

OrderId int not null identity(1,1)

constraint PK_Orders

primary key nonclustered hash

with (bucket_count=1049008),

OrderNum varchar(32)

collate Latin1_General_100_BIN2 not null,

OrderDate datetime2(0) not null

constraint DEF_Orders_OrderDate

default GetUtcDate(),

/* Other Columns */

index IDX_Orders_OrderNum

nonclustered(OrderNum)

)

with (memory_optimized = on, durability = schema_and_data);

create table dbo.OrderLineItems

(

OrderId int not null,

OrderLineItemId int not null identity(1,1)

constraint PK_OrderLineItems

primary key nonclustered hash

with (bucket_count=4196032),

ArticleId int not null,

Quantity decimal(8,2) not null,

Price money not null,

/* Other Columns */

index IDX_OrderLineItems_OrderId

nonclustered hash(OrderId)

with (bucket_count=1049008)

)

with (memory_optimized = on, durability = schema_and_data);

go

create type dbo.tvpOrderLineItems as table

(

ArticleId int not null

primary key nonclustered hash

with (bucket_count = 1024),

Quantity decimal(8,2) not null,

Price money not null

/* Other Columns */

)

with (memory_optimized = on);

go

create proc dbo.DeleteOrder

(

@OrderId int not null

)

with native_compilation, schemabinding, execute as owner

as

begin atomic

with

(

transaction isolation level = serializable

,language=N'English'

)

-- This stored procedure emulates ON DELETE NO ACTION

-- foreign key constraint behavior

declare

@Exists bit = 0

select @Exists = 1

from dbo.OrderLineItems

where OrderId = @OrderId

if @Exists = 1

begin

;throw 60000, 'Referential Integrity Violation', 1;

return

end

delete from dbo.Orders where OrderId = @OrderId

end

go

create proc dbo.InsertOrderLineItems

(

@OrderId int not null

,@OrderLineItems dbo.tvpOrderLineItems readonly

)

with native_compilation, schemabinding, execute as owner

as

begin atomic

with

(

transaction isolation level = repeatable read

,language=N'English'

)

declare

@Exists bit = 0

select @Exists = 1

from dbo.Orders

where OrderId = @OrderId

if @Exists = 0

begin

;throw 60001, 'Referential Integrity Violation', 1;

return

end

insert into dbo.OrderLineItems(OrderId, ArticleId, Quantity, Price)

select @OrderId, ArticleId, Quantity, Price

from @OrderLineItems

end

It is worth noting that InsertOrderLineItems procedure is using REPEATABLE READ isolation level. In this scenario, we need to make sure that referenced Order row has not been deleted during the execution and REPEATABLE READ enforces that introducing less overhead than SERIALIZABLE isolation level.

That technique comes with another small benefit. It can demonstrate advantage of having dedicated data access tier to application developers helping to convince them to build one. All of us, database professionals, would like it, don’t we? 🙂

Source code is available for download.