No SQL Tips today. A couple notes from PASS Summit 2010 where Microsoft announced SQL Server code name “Denali”. There are a few interesting enhancements in T-SQL for that version.

1. Exception handling

SQL Server finally enhanced exception handling to make it more or less useful. No, there are still no such thing like “Finally” block but there is THROW operator that allows to throw and re-throw exceptions. It always comes with severity 16 and works from within code blocks – you can throw an error from the inner stored procedure and catch it in the outer stored procedure catch block. Good enhancement in compare with raiserror

2. Sequences

Nice to have feature even if it’s easy to implement similar mechanism right now with the separate table.

3. Contained databases

SQL Server “Denali” introduces “Partial” self-contained database mode. The long time roadmap is to be able to create the database which is self-contained and can be moved/deployed from one server to another and “just works”. It should include logins, linked servers, sql broker settings, etc. “Denali” technically support only “PARTIAL” mode that disconnects logins from the database (You will be able to create database user with password and SQL Server will be able to authenticate against this user). Obviously in this mode you will not be able to access any resources outside of the database. Another thing in this mode – objects in tempdb would use/be created in the same collation with the database.

In addition to that it introduces the DMVs show the breaches for the contained mode. It would include dynamic sql, references to other databases, linked servers, service broker queues, etc. Good feature, but not really useful for ISV who needs to support multiple SQL Server versions.

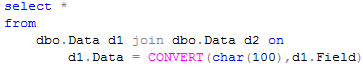

4. Better paging support

T-SQL will support selects like that:

<code>select ..

from Table

Order By Field

OFFSET 100 ROWS

FETCH NEXT 50 ROWS ONLY </code>

5. Ability to specify the shape of results from EXECUTE statement

You will be able to specify the format of the result sets that EXECUTE statement returns. Interestingly enough, Microsoft mentioned that you will be able to do it with sp_executesql but there is nothing about it in the documentation. Hope it would be supported especially because best practices suggest to use sp_executesql instead of EXEC

There are a few more features including UTF-16 support, different methods of metadata discovery, some enhancements in full-text search but nothing is really major

Will see and hope that new version of SQL Server would be really good.